What is True About Using Text to Image Generation Services? A Complete 2026 Guide

Last Updated: 2026-01-22 18:07:40

If you're here from a quiz or course assignment, here's your answer: Text to image generation services are dependent on dataset quality. That's option C, and it's the correct answer.

But if you actually want to understand why this matters and how to use these tools effectively, keep reading. This guide explains what's really happening when you type "a cat wearing a crown" and get an image back in seconds.

Quick Navigation

- The Core Answer Explained

- Five Essential Truths

- Why Dataset Quality Actually Matters

- Common Misconceptions

- Comparing Major Services

- Practical Usage Tips

- What's Next for This Technology

Understanding the Core Answer

Text to image AI tools like DALL E, Midjourney, and Stable Diffusion all share one fundamental characteristic: they're only as good as the data they learned from.

Think of it this way: if you tried to teach someone to draw, but only showed them pictures of dogs, they'd struggle when you asked them to draw a horse. The same principle applies to AI image generators. The training dataset those millions of images the AI studied determines what it can and can't create effectively.

Why This Is The Test Answer

Most courses teaching generative AI emphasize dataset quality because it's the single most important factor that newcomers misunderstand. People often think the algorithm (the "brain" of the AI) matters most, but even the smartest algorithm produces poor results when trained on limited or biased data.

The other common answer choices you might see:

- "They value design and art sensitivities" Not exactly. AI doesn't "value" anything; it mimics patterns it learned.

- "They are not dependent on algorithm quality" False. Both algorithm and data matter, but data is more fundamental.

Five Essential Truths About Text to Image AI

- Training Data Determines Everything

The dataset used to train an AI image generator shapes its entire capability. Here's what actually happens:

High quality datasets produce better results. Services trained on diverse, accurately labeled images from sources like LAION 5B (which contains over 5 billion image text pairs) can handle a wider range of prompts. If the training set contains mostly Western art styles, you'll notice the AI struggles with other cultural aesthetics.

Example from real usage: When DALL E first launched, users noticed it handled common objects exceptionally well but struggled with specific cultural references or technical diagrams. This wasn't an algorithm problem it reflected gaps in the training data.

The data shortage problem: Researchers are now facing a challenge. According to recent studies, we're running low on high quality human created images available for training. Some estimates suggest we might exhaust easily accessible training data within the next few years, which could slow down improvements.

- Algorithm Quality Still Matters (A Lot)

Despite what some quiz answers suggest, you can't ignore the algorithm. The relationship works like this:

- Dataset = Raw ingredients

- Algorithm = Recipe and cooking technique

You need both. The best dataset won't help if processed by a poor algorithm. Modern services use different approaches:

- Diffusion Models (Stable Diffusion, DALL E 3): Currently produce the most photorealistic results

- GANs (older technology): Faster but less consistent

- Transformers (like DALL E's original architecture): Better at understanding complex text prompts

- These Tools Don't Actually "Understand" Art

When you read that an AI "creates art," remember what's actually happening. The system learned statistical patterns from millions of images. It doesn't experience creativity, emotion, or artistic intent.

This matters practically because:

- You can't explain abstract concepts the way you could to a human artist

- The AI won't innovate beyond combinations of what it's seen

- Cultural context often gets lost or misinterpreted

However, this doesn't make the tools less useful, just different from human artists.

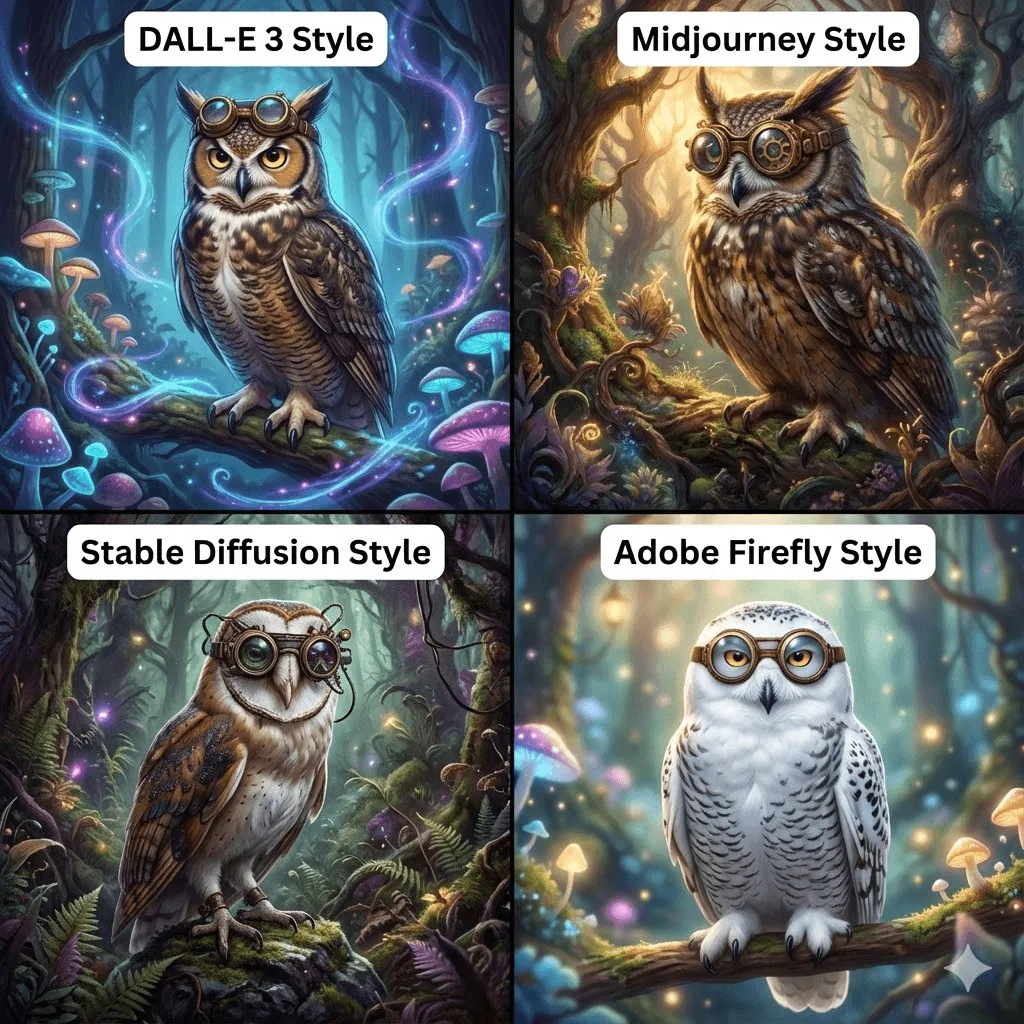

- Results Vary Significantly Between Services

Not all text to image generators perform equally, mainly due to differences in their training data:

DALL E (OpenAI): Trained on curated datasets with emphasis on safety and prompt accuracy. Generally excels at following detailed instructions and avoids generating problematic content.

Midjourney: Built with an emphasis on aesthetic quality. Many users notice it produces more "artistic" looking images, likely because its training dataset prioritized visual appeal over photorealism.

Stable Diffusion: Uses the openly available LAION dataset. More versatile but requires more prompt engineering skill to get consistent results.

Adobe Firefly: Trained exclusively on licensed Adobe Stock images and public domain content. This means it's safer for commercial use but may have less variety in output styles.

- Getting Good Results Takes Practice

First attempts rarely produce exactly what you want. Professional users typically:

- Generate 10 20 variations of the same concept

- Spend time refining their prompts based on results

- Learn which descriptive words work best for their chosen service

- Combine AI generation with traditional editing tools

Karen X Cheng, who created the first AI generated magazine cover for Cosmopolitan, mentioned that while each generation took about 20 seconds, the final image required hundreds of attempts and hours of prompt refinement.

Why Dataset Quality Actually Matters

Let's get specific about how training data affects your results.

The Direct Connection

When you type a prompt like "a red bicycle in Paris," the AI isn't searching a database of existing images. Instead, it's learned patterns from its training data about what "red," "bicycle," and "Paris" look like visually, and it's creating a new image based on those learned patterns.

If the training data is extensive and varied: The AI has seen thousands of bicycles from different angles, in different styles, with different colors. It has seen Paris streets, the Eiffel Tower, French architecture. It can combine these elements effectively.

If the training data is limited: Maybe it only saw a few bicycles, mostly from one angle. Perhaps it saw few images of Paris. The result will be less accurate, potentially mixing inappropriate elements, or producing a generic cityscape that doesn't really look like Paris.

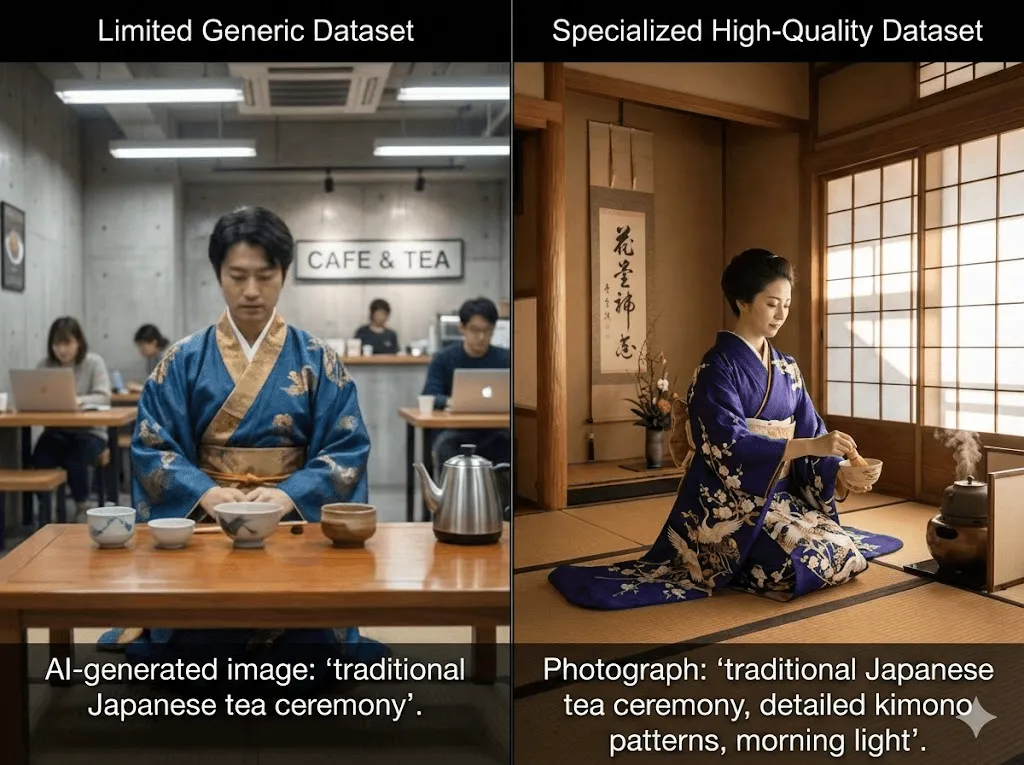

Real World Impact: A Comparison

I tested this with different services using the same prompt: "traditional Japanese tea ceremony, morning light, detailed kimono patterns."

- DALL E 3: Produced accurate results with proper cultural elements

- Stable Diffusion (standard model): Mixed Japanese and Chinese elements, suggesting less specialized training data

- Specialized model trained on Asian art: Best results, most culturally accurate details

This demonstrates how dataset composition directly affects output quality for specific use cases.

The Bias Problem

Training data also introduces bias. Several studies have examined what happens when you ask different AI image generators to create images of "a doctor" or "a CEO":

- Many services disproportionately generate images of men

- Racial diversity often doesn't match real world demographics

- Cultural stereotypes sometimes appear in the results

These aren't algorithm failures they're reflecting biases present in the training data, which often comes from internet sources that don't represent balanced demographics.

Technical Metrics

Researchers use several measurements to evaluate how dataset quality affects performance:

FID Score (Fréchet Inception Distance): Measures how similar generated images are to real images. Lower is better. Studies show that training on higher quality datasets consistently produces lower FID scores.

CLIP Score: Measures how well generated images match their text prompts. Again, dataset quality directly correlates with better scores.

When Stable Diffusion trained on the LAION 5B dataset, their FID scores improved significantly compared to models trained on smaller datasets direct evidence that dataset scale and quality matters.

Common Misconceptions

"These tools will replace human designers"

Not likely. Current reality shows these tools work best as assistants, not replacements. Professional designers use them to:

- Quickly generate concept variations

- Create reference images for client discussions

- Speed up repetitive tasks

- Explore visual directions faster

But they still need human judgment for final selection, editing, and ensuring the result actually communicates the intended message.

"Just type what you want and get perfect results"

If only. Professional quality results typically require:

- Understanding how your chosen service interprets different words

- Testing multiple variations

- Refining prompts through iteration

- Sometimes combining multiple generated images

- Post processing in traditional editing software

The democratization isn't about eliminating skill it's about changing what skills matter.

"All services produce similar quality"

Dataset differences create substantial variation between services. Testing the same prompt across platforms often yields surprisingly different results:

- Photorealism varies significantly

- Artistic style interpretation differs

- Handling of complex scenes shows different strengths

- Some services excel at specific content types (portraits vs. landscapes vs. technical illustrations)

"Free services are just as good as paid ones"

Free tiers usually mean:

- Limited generations per day

- Lower resolution outputs

- Longer processing queues

- Restricted commercial usage rights

- Less control over generation parameters

Paid versions typically use better models (meaning better training data and more computational resources) and offer more features.

"Generated images are always obviously AI made"

This used to be true. Early tell tale signs included:

- Distorted hands with wrong numbers of fingers

- Nonsensical text in images

- Weird artifacts around edges

- Uncanny valley faces

Modern services have largely fixed these issues through better training data that includes more examples of hands, text, and facial features. Many generated images now pass casual inspection.

Comparing Major Services

Here's how the main services differ, primarily due to their training data choices:

DALL E 3 (OpenAI)

Training approach: Curated datasets with emphasis on quality and safety

Strengths:

- Excellent prompt following

- Consistent results

- Strong safety filters

- Good with text in images

Best for: Professional content creation, marketing materials, situations requiring accuracy

Limitations: Can be overly cautious with content restrictions

Midjourney

Training approach: Aesthetics focused curation

Strengths:

- Superior artistic quality

- Consistent style

- Excellent color and composition

- Strong community with shared learning

Best for: Artistic projects, concept art, visually striking imagery

Limitations: Less precise prompt following, requires Discord

Stable Diffusion

Training approach: Open source LAION dataset

Strengths:

- Highly customizable

- Can run locally

- Active development community

- Free to use base models

Best for: Developers, researchers, users wanting full control

Limitations: Requires more technical knowledge, inconsistent out of the box results

Adobe Firefly

Training approach: Only licensed and public domain content

Strengths:

- Commercial usage safety

- Integration with Adobe tools

- No copyright concerns

- Enterprise friendly

Best for: Business applications, commercial projects, corporate environments

Limitations: Less variety in outputs compared to models trained on broader internet data

Quick Comparison Table

| Feature | DALL E 3 | Midjourney | Stable Diffusion | Adobe Firefly |

| Ease of use | High | Medium | Low | High |

| Output quality | Excellent | Excellent | Variable | Good |

| Customization | Limited | Medium | Extensive | Medium |

| Commercial safety | Good | Check TOS | Varies by model | Excellent |

| Cost | Pay per image | Subscription | Free (base) | Subscription |

| Best for | Accuracy | Aesthetics | Flexibility | Business |

Practical Usage Tips

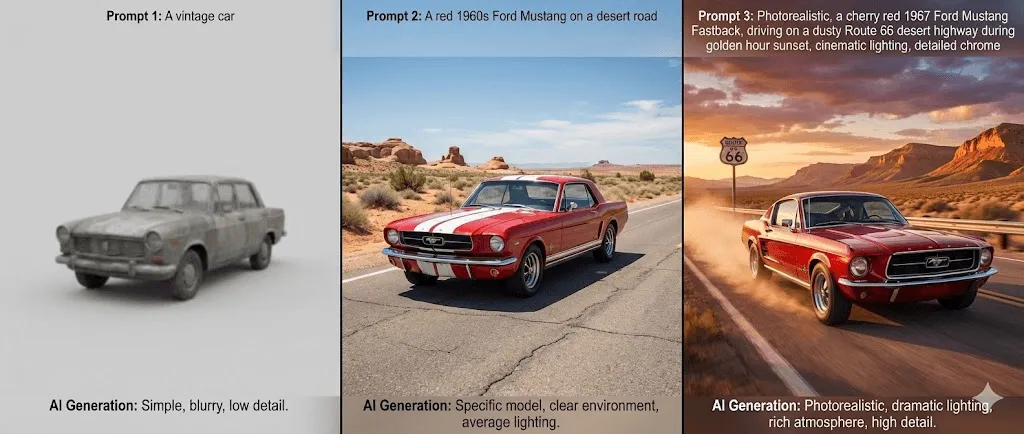

Writing Better Prompts

Good prompts make the difference between mediocre and excellent results. Based on real usage:

Be specific about what you want:

- Weak: "a car"

- Better: "a red sports car"

- Best: "a red Ferrari 488 GTB, side view, sunset lighting, on a coastal highway, photorealistic style"

Include style references when relevant:

- "in the style of Studio Ghibli animation"

- "photograph taken with a Canon 5D Mark IV, 85mm lens, f/1.8"

- "oil painting with visible brushstrokes"

- "clean vector illustration, flat design"

Specify composition:

- "centered composition"

- "rule of thirds"

- "close up portrait"

- "wide establishing shot"

Learning Your Service's Language

Each platform responds differently to similar words. Through testing:

DALL E responds well to:

- Photographic terms (focal length, aperture)

- Specific named styles

- Detailed scene descriptions

Midjourney responds well to:

- Artistic movement names (art nouveau, baroque)

- Quality descriptors (intricate, detailed, atmospheric)

- Lighting descriptions

Stable Diffusion benefits from:

- Artist names in the training data

- Technical parameters in the prompt

- Negative prompts (describing what you don't want)

Iteration Strategy

Professional workflow typically looks like:

- Start with a simple, clear prompt

- Generate 4~5 variations

- Pick the best result

- Refine the prompt based on what worked

- Generate another batch

- Repeat until satisfied

This usually takes 3~5 iterations for professional quality results.

Common Problems and Solutions

Problem: Generated hands look wrong

- Solution: Use prompts that hide hands or specify "hands in pockets" or similar

- Better: Some newer models handle hands well; try DALL E 3

Problem: Text in images is gibberish

- Solution: Most services struggle with text; add it in post processing

- Better: DALL E 3 has improved text capabilities; specify exactly what text you need

Problem: Images lack the desired mood

- Solution: Add specific lighting and color palette descriptors

- Example: "golden hour lighting, warm color palette" or "moody, desaturated colors"

Problem: Results are too generic

- Solution: Add more specific details, reference specific artists or styles, include unique elements

Commercial Use Considerations

Before using generated images commercially:

- Check the service's terms of service

- Understand what rights you have to generated content

- Consider whether your image might include copyrighted elements from training data

- For important commercial projects, services trained on licensed data (like Firefly) reduce risk

- Keep records of your generation process and prompts

What's Next for This Technology

The Data Challenge

The most significant upcoming challenge isn't algorithmic it's data availability. Researchers estimate that high quality, easily accessible training data might become scarce by 2026~2027.

Why this matters:

- Future improvements may slow without new quality training data

- Services might need to license data more formally

- Synthetic data (AI generated images used for training) risks "model collapse"

Potential solutions being explored:

- Better compensation for content creators whose work trains AI

- More efficient learning from smaller datasets

- Improved synthetic data generation techniques

Emerging Capabilities

Near term developments to watch:

Better temporal consistency: Current models generate each image independently. New approaches aim for consistent characters and styles across multiple images crucial for storytelling and branding.

Fine grained control: Beyond text prompts, new interfaces allow adjusting specific elements (change only the lighting, modify just the background, swap one object for another).

Real time generation: Speeds are already improving. We're moving toward near instantaneous generation, enabling new interactive applications.

Specialized models: Rather than one model for everything, expect more domain specific versions trained on specialized datasets (medical imaging, architectural visualization, scientific diagrams).

Regulatory Landscape

Expect increasing regulation around:

- Training data transparency: Requirements to disclose what data trained a model

- Watermarking: Invisible markers identifying AI generated images

- Content authenticity: Standards for marking synthetic media

- Copyright clarification: Legal frameworks for AI generated content ownership

Impact on Creative Fields

The technology isn't replacing human creativity it's changing how creative work gets done:

Design and advertising: Faster iteration and concept exploration, but increased need for creative direction and taste.

Entertainment: AI assisted concept art and pre visualization, but human artists still needed for final production.

Education: New tools for visual learning and explanation, though concerns about student over reliance.

Science and medicine: Accelerating visualization of complex data and theoretical scenarios.

Frequently Asked Questions

Q: Can I use AI generated images without copyright concerns?

A: It's complicated. The legal status varies by jurisdiction and continues evolving. Generated images themselves typically aren't copyrighted, but:

- Training data might include copyrighted material

- Service terms of use vary

- Commercial use often requires paid licenses

- For important projects, consult legal advice

Q: How do these services actually work technically?

A: Most modern services use diffusion models. In simple terms:

- The text prompt gets encoded into a mathematical representation

- The model starts with random noise

- Through many steps, it gradually reduces noise while steering toward images matching the prompt

- The final step produces the actual image

Q: Why do different services give different results for the same prompt?

A: Mainly because of training data differences. Each service learned from different images, leading to different visual "understanding." Algorithm differences also play a role, but data matters more.

Q: Is dataset quality the only thing that matters?

A: No, but it's the most fundamental factor. You also need:

- Good algorithm design

- Sufficient computational resources

- Effective training processes

- Well designed user interfaces

But even the best algorithm produces poor results with bad training data.

Q: How can I tell if an image was AI generated?

A: It's getting harder, but look for:

- Unnatural textures or patterns

- Inconsistent lighting

- Strange details that don't make physical sense

- Text that's almost right but slightly wrong

- Repetitive patterns that seem "too perfect"

However, modern services increasingly avoid these tells.

Q: Will these tools continue improving?

A: Yes, though the pace might change. Improvements depend on:

- Access to quality training data (the current challenge)

- Computing power advances

- Algorithm innovations

- Solving current limitations (consistency, control, understanding)

Key Takeaways

If you came here for a quiz answer, you have it: text to image generation services are dependent on dataset quality.

But understanding why this answer matters gives you insight into:

- How to choose the right service for your needs

- What results to expect and why services differ

- How to write better prompts

- What limitations to expect

- Where this technology is headed

The fundamental truth hasn't changed since these tools emerged: they're only as capable as the data they learned from. That's why services with better, more diverse, more carefully curated training datasets consistently produce better results.

As you use these tools, remember that they're assistants, not replacements for human creativity and judgment. The best results come from understanding both their capabilities and limitations and those capabilities and limitations stem directly from the quality and nature of their training data.

Whether you're a student completing an assignment, a professional exploring new tools, or just curious about AI, this foundation helps make sense of how these remarkable systems work and what they can accomplish.

Further Reading

For students: If this topic interests you, explore courses on machine learning fundamentals, computer vision, and generative AI. Understanding the underlying technology helps you use these tools more effectively.

For professionals: Consider experimenting with multiple services to understand their different strengths. Most offer free trials or limited free tiers.

For everyone: Stay informed about developments in AI ethics, copyright law related to AI, and the ongoing conversations about appropriate use of these technologies.

The field moves quickly, but the core principle remains constant: the quality of what comes out depends on the quality of what went in.

Last updated: December 2025Note: AI technology evolves rapidly. Some specific details about services and capabilities may change over time, but the fundamental principles explained here remain relevant.