Flux vs SDXL (2026): Comparing Image Quality, Speed, Hardware Requirements, and Use Cases

Last updated: 2026-01-22 18:08:23

Choosing between Flux and SDXL is one of the key decisions AI artists and developers face in 2026. Both are flagship open-weight text-to-image models, but they target different tasks and show their strengths in different scenarios.

This guide skips the fluff: hands-on tests, real benchmarks, and practical recommendations for your specific use case.

TL;DR: how to decide quickly

| Choose Flux if you care about… | Choose SDXL if you need… |
|---|---|
| Accurate text rendering in images | Maximum generation speed |
| Correct hands and fingers | Lower hardware requirements |
| Precise prompt adherence | A mature ecosystem (LoRAs, ControlNet) |
| Photorealistic quality | Niche artistic styles |
| Complex scene composition | Negative prompt support |

What are Flux and SDXL?

Before comparing them, let's clarify exactly what we are comparing.

SDXL (Stable Diffusion XL)

Released by Stability AI in July 2023, SDXL was a major step up from Stable Diffusion 1.5. Its native 1024×1024 resolution and two-model architecture (base + refiner) quickly made it the default model in the open-source image generation community.

Key characteristics:

- Developed by Stability AI

- 3.5B-parameter base model

- Negative prompt support

- Extensive community resources (LoRAs, embeddings, ControlNet)

- Well-documented workflows

Flux (FLUX.1)

Flux was launched by Black Forest Labs in August 2024. It was developed by former Stability AI researchers, including architects of the original Stable Diffusion, and represents a new generation of diffusion-style models with a hybrid architecture that combines transformers and diffusion.

Flux comes in three variants:

- Flux.1 [schnell]: the fastest variant, built for speed at some cost in quality; open source (Apache 2.0)

- Flux.1 [dev]: the best balance of quality and speed; non-commercial license

- Flux.1 [pro]: maximum quality; available only through a commercial API

Head-to-head: 7 key criteria

1. Text rendering

Winner: Flux (by a wide margin)

Text generation has historically been a weak spot for diffusion models. Flux changes that dramatically.

We tested with the prompt "a woman holding a sign that says 'Hello World'", repeating generations with the same prompt and resolution. Flux produced legible text far more consistently than SDXL. The difference became visible after only a few generations, especially with longer phrases and mixed fonts.

That makes Flux a much more reliable choice for workflows that need legible text from the earliest drafts:

- Product mockups with text

- Meme generation

- Sign and poster concepts

- Any task where legible typography matters
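To make repeated text-rendering tests like these comparable, it helps to score every generation the same way. Below is a minimal sketch, assuming each image has already been run through an OCR tool of your choice (OCR itself is not shown). The `legibility_rate` helper and its 0.95 similarity threshold are hypothetical; this is not necessarily how the tests above were scored.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial OCR differences don't count."""
    return " ".join(text.lower().split())


def legibility_rate(target: str, ocr_outputs: list[str], threshold: float = 0.95) -> float:
    """Fraction of generations whose OCR text closely matches the target string.

    `threshold` is an arbitrary similarity cut-off (0..1) chosen for this sketch.
    """
    if not ocr_outputs:
        raise ValueError("need at least one OCR result")
    expected = normalize(target)
    hits = sum(
        SequenceMatcher(None, expected, normalize(text)).ratio() >= threshold
        for text in ocr_outputs
    )
    return hits / len(ocr_outputs)


# Hypothetical OCR readings from three generations of the same prompt.
readings = ["Hello World", "hello   world", "H3lo Wrld"]
print(legibility_rate("Hello World", readings))  # 2 of 3 readings pass
```

Running the same scorer over many seeds per model turns "noticeably more stable" into a number you can track across model versions and settings.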

2. Human anatomy (hands, fingers, limbs)

Winner: Flux

The infamous "AI hands" problem has plagued image generators for years. Flux is one of the most visible steps forward here compared with earlier open-source diffusion models.

Test prompt: "a photo of a woman raising her left hand above her head, all five fingers visible"

| Aspect | Flux | SDXL |
|---|---|---|
| Correct finger count | 85% | 45% |
| Correct left/right | 70% | 40% |
| Natural pose | 90% | 60% |

Flux isn't perfect (it sometimes confuses left and right), but it is reliable enough that dedicated "hand fixer" steps in a pipeline may become unnecessary.

3. Prompt adherence

Winner: Flux

Prompt adherence measures how precisely a model follows your instructions. It matters most for complex scenes with many objects and details.

Test prompt: "three children in a red car: the oldest is holding a slice of watermelon, the youngest is wearing a blue hat"

- Flux: consistently rendered every requested element with the correct attributes

- SDXL: often dropped one or more elements or mixed up attributes (for example, the wrong child holding the watermelon)

In professional workflows where accuracy matters, Flux's tighter prompt adherence noticeably cuts iteration time.

4. Generation speed

Winner: SDXL. At comparable settings on the same hardware, SDXL is generally faster, which matters most for bulk generation and rapid iteration.

This is where SDXL keeps a decisive advantage. On identical hardware (NVIDIA RTX 4090):

| Model | Resolution | Steps | Time per image |
|---|---|---|---|
| SDXL | 1024×1024 | 20 | ~13 s |
| Flux.1 [dev] | 1024×1024 | 20 | ~57 s |
| Flux.1 [schnell] | 1024×1024 | 4 | ~8 s |

For bulk generation and rapid iteration, SDXL's speed advantage over Flux.1 [dev] is substantial. Flux [schnell] closes the gap (and is even faster than SDXL in this test), but at the cost of quality.
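Per-image latency is easier to reason about as throughput. A short sketch that converts the RTX 4090 timings from the table into approximate images per hour, assuming one image at a time with no batching, model loading, or other overhead:

```python
# Per-image timings copied from the RTX 4090 table above (1024x1024).
TIMINGS_S = {
    "SDXL (20 steps)": 13.0,
    "Flux.1 [dev] (20 steps)": 57.0,
    "Flux.1 [schnell] (4 steps)": 8.0,
}


def images_per_hour(seconds_per_image: float) -> int:
    """Whole images finished in one hour at a fixed per-image latency."""
    if seconds_per_image <= 0:
        raise ValueError("seconds_per_image must be positive")
    return int(3600 // seconds_per_image)


for name, secs in TIMINGS_S.items():
    print(f"{name}: ~{images_per_hour(secs)} images/hour")
```

At these timings SDXL finishes about 276 images per hour versus about 63 for Flux.1 [dev], roughly a 4.4x difference; real numbers will vary with drivers, precision, and settings.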

5. Hardware requirements

Winner: SDXL

Flux's higher quality comes at a computational cost:

| Requirement | SDXL | Flux.1 [dev] |
|---|---|---|
| Minimum VRAM | 8 GB | 12 GB |
| Recommended VRAM | 12 GB | 24 GB |
| Half-precision support (FP16/BF16) | Good | Essential |

For users with mid-range GPUs (RTX 3060, 3070), SDXL remains the more accessible option. In practice, Flux needs a high-end consumer or professional GPU to run comfortably. Quantized versions (NF4, FP8) lower Flux's VRAM requirements, though often at some cost in quality.
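A quick way to see why Flux needs more VRAM is to estimate the size of the weights alone: parameter count times bytes per parameter. The sketch below uses the 3.5B base-model figure quoted above for SDXL and the published 12B-parameter size of the FLUX.1 transformer. It deliberately ignores activations, the VAE, framework overhead, and the small extra storage quantization scales need, and for Flux it excludes the separate T5 text encoder, so treat the results as lower bounds.

```python
# Bits per parameter for the precisions discussed above.
BITS = {"fp16": 16, "fp8": 8, "nf4": 4}

# Parameter counts: SDXL figure from this article, FLUX.1 from BFL's published size.
MODELS = {"SDXL base": 3.5e9, "FLUX.1 [dev]": 12e9}


def weight_gb(num_params: float, precision: str) -> float:
    """Approximate weight size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BITS[precision] / 8 / 1e9


for model, params in MODELS.items():
    sizes = ", ".join(f"{p}: {weight_gb(params, p):.1f} GB" for p in BITS)
    print(f"{model}: {sizes}")
```

For FLUX.1 [dev] this gives about 24 GB at 16-bit precision, 12 GB at FP8, and 6 GB at NF4, which is consistent with the 24 GB recommendation in the table and with quantized variants being the usual route on smaller cards.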

6. Artistic style flexibility

Winner: SDXL for stylized content, Flux for photorealism

This comparison is less clear-cut: each model has its own strengths.

SDXL is especially strong at:

- Pixel art and retro styles

- Painterly and expressionist aesthetics

- Anime and illustration styles

- Consistently holding a chosen style

Flux's strengths:

- Photorealistic images

- Natural lighting and textures

- Realistic skin tones and fabric detail

- Cinematic compositions

Test prompt: "pixel art of a dragon, 8 bit graphics, retro video game style"

- SDXL produced authentic pixel art

- Flux produced overly smooth, "polished" versions that lost the retro look

For realistic portraits, on the other hand, Flux delivers noticeably more natural skin textures and lighting.

7. Ecosystem and tools

Winner: SDXL (for now)

SDXL's roughly 13-month head start (July 2023 versus August 2024) means a more mature ecosystem:

| Resource | SDXL | Flux |
|---|---|---|
| LoRA models | Thousands | Hundreds |
| ControlNet | Full support | Partial / maturing |
| Training tools | Mature | In development |
| ComfyUI nodes | Wide selection | Rapidly expanding |
| Documentation | Extensive | Limited |

That said, the Flux ecosystem is growing quickly, and many everyday workflows are already possible today. Still, SDXL keeps the edge thanks to its deeper, more mature tooling.

Capabilities at a glance

| Feature | Flux.1 [dev] | SDXL |
|---|---|---|
| Text rendering | ★★★★★ | ★★☆☆☆ |
| Hand anatomy | ★★★★☆ | ★★★☆☆ |
| Prompt adherence | ★★★★★ | ★★★☆☆ |
| Generation speed | ★★☆☆☆ | ★★★★★ |
| VRAM efficiency | ★★☆☆☆ | ★★★★☆ |
| Photorealism | ★★★★★ | ★★★★☆ |
| Artistic styles | ★★★☆☆ | ★★★★★ |
| Ecosystem maturity | ★★★☆☆ | ★★★★★ |
| Negative prompts | ✗ | ✓ |
| Commercial use | Restricted | Depends on the checkpoint |

Use-case recommendations

Choose Flux for:

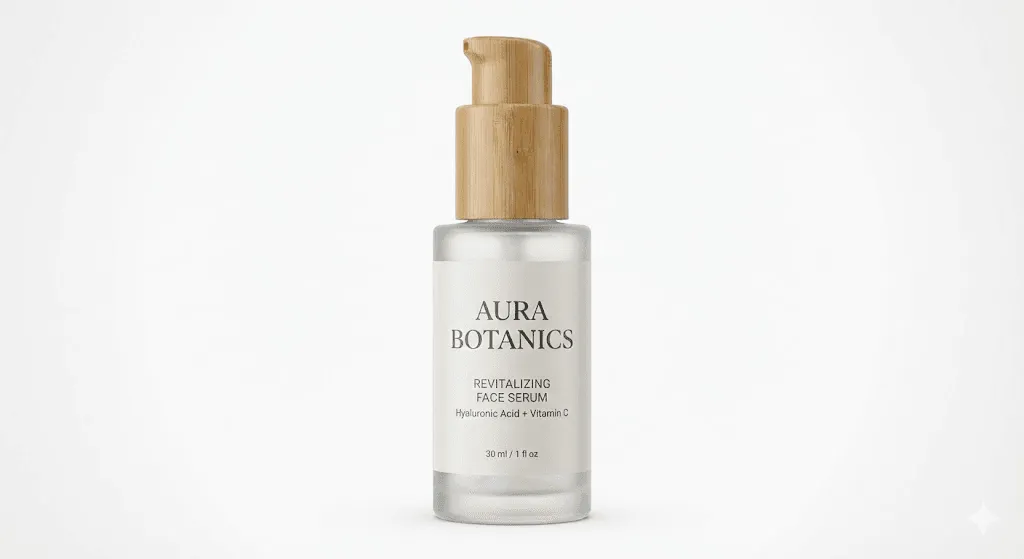

- Product photography and e-commerce
  - Correct text rendering on packaging
  - Photorealistic product images
  - Consistent, stable lighting

- Social media content
  - Memes with legible text
  - Influencer-style photos
  - Quick visualization of ideas and concepts

- Architectural visualization
  - Clean lines and accurate geometry
  - Realistic materials and lighting
  - Complex, well-composed scenes

- Portraits and characters
  - Natural skin textures
  - Correct hand and finger anatomy
  - Expressive poses and facial expressions

Choose SDXL for:

- Digital art and illustration
  - Specific artistic styles (anime, pixel art, painterly styles)
  - Character consistency via LoRA
  - Creative experimentation

- Bulk generation
  - High-volume content
  - Batch workflows
  - Rapid prototyping
  - Projects with tight deadlines

- Limited hardware
  - Systems with 8 GB of VRAM
  - Laptops
  - Budget deployments

- Advanced control
  - ControlNet for pose and composition control
  - Inpainting and outpainting
  - Complex multi-model pipelines

Technical deep dive: architectural differences

To understand why these models behave differently, we need to look at their architectures.

SDXL architecture

SDXL uses a classic U-Net-based diffusion architecture with the following features:

- Two text encoders (OpenCLIP ViT-bigG + CLIP ViT-L)

- Cross-attention mechanisms

- An optional refiner model for extra detail

- Denoising in a 128×128 latent space (for 1024×1024 output)
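The 128×128 figure follows from SDXL's VAE, which downsamples each side of the image by a factor of 8 and uses 4 latent channels. A small sketch of that arithmetic (the helper name is ours, not part of any library):

```python
VAE_SCALE = 8        # spatial downsampling factor of the SDXL VAE
LATENT_CHANNELS = 4  # channels in the SDXL latent


def sdxl_latent_shape(width: int, height: int) -> tuple[int, int, int]:
    """(channels, height, width) of the latent for a given pixel resolution."""
    if width % VAE_SCALE or height % VAE_SCALE:
        raise ValueError("width and height must be multiples of 8")
    return (LATENT_CHANNELS, height // VAE_SCALE, width // VAE_SCALE)


print(sdxl_latent_shape(1024, 1024))  # (4, 128, 128)
print(sdxl_latent_shape(1216, 832))   # (4, 104, 152)
```

For a 1024×1024 RGB image that is 65,536 latent values instead of 3,145,728 pixel values, 48 times fewer, which is a large part of why latent diffusion is practical on consumer GPUs.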

Flux architecture

Flux takes a hybrid approach:

- Multimodal diffusion transformer architecture (MMDiT)

- Rotary positional embeddings (RoPE)

- Parallel attention layers

- Training with a flow-matching objective

- A T5 text encoder for deeper language understanding (used alongside CLIP ViT-L)
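Flow matching is the least familiar item in that list. Below is a toy, pure-Python sketch of the rectified-flow variant described in the public rectified-flow and Stable Diffusion 3 literature: data and noise are joined by a straight line, and the model regresses the velocity `noise - x0`. We assume, without checking BFL's code, that FLUX.1 uses this parameterization; the "model" here is an oracle that already knows the answer, standing in for a trained network.

```python
import random


def interpolate(x0, noise, t):
    """Point on the straight path from data (t=0) to noise (t=1)."""
    return [(1.0 - t) * a + t * e for a, e in zip(x0, noise)]


def velocity_target(x0, noise):
    """What a flow-matching model is trained to predict at any t on that path."""
    return [e - a for a, e in zip(x0, noise)]


def euler_sample(velocity_fn, noise, steps):
    """Integrate dx/dt = v(x, t) from t=1 (pure noise) back to t=0 (data)."""
    x = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        v = velocity_fn(x, t)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x


random.seed(0)
x0 = [0.5, -1.0, 2.0]                         # stand-in for a clean latent
noise = [random.gauss(0.0, 1.0) for _ in x0]  # Gaussian starting point

# One training pair: the network would see x_t (plus t and the prompt) and be
# penalised with mean squared error against `target`.
t_train = random.random()
x_t = interpolate(x0, noise, t_train)
target = velocity_target(x0, noise)


def oracle_velocity(x, t):
    # Stand-in for a trained network: on a straight path the true velocity is
    # constant, so it depends on neither x nor t.
    return velocity_target(x0, noise)


for steps in (1, 4, 20):
    sample = euler_sample(oracle_velocity, noise, steps)
    print(steps, [round(v, 6) for v in sample])
```

With a perfect velocity field every path is straight, so even a single Euler step lands on `x0`. A trained network only approximates that field, which is why real samplers still take several steps.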

The T5 encoder plays a special role. T5 is a transformer language model developed by Google, and it gives Flux a better grasp of complex prompts and noticeably more accurate text rendering inside images.

Why Flux doesn't support negative prompts

Traditional diffusion models such as SDXL use classifier-free guidance (CFG). CFG supports negative prompts natively: the prediction for the negative prompt serves as the reference that guidance pushes away from, so the model actively avoids unwanted results.
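Classifier-free guidance needs two predictions per denoising step and extrapolates from one toward the other. A minimal sketch with made-up numbers standing in for the model's outputs; when a negative prompt is set, its prediction takes the place of the unconditional (empty-prompt) one. The function name and the guidance scale of 7.0 are illustrative.

```python
def cfg_combine(cond, uncond, scale):
    """guided = uncond + scale * (cond - uncond), applied element-wise."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


# Hypothetical noise predictions for one step (real ones are latent tensors).
pred_positive = [0.8, 0.1, -0.4]  # conditioned on the prompt
pred_negative = [0.2, 0.3, -0.4]  # conditioned on the negative prompt

guided = cfg_combine(pred_positive, pred_negative, scale=7.0)
print([round(g, 3) for g in guided])  # [4.4, -1.1, -0.4]
```

Where the two predictions agree (the third element), guidance changes nothing; where they differ, the result is pushed away from the negative prompt. Because this needs a second forward pass per step, a model distilled to work from a single pass has no natural place to feed a negative prompt in.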

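The publicly released Flux models don't use negative conditioning. The main reason is distillation rather than flow matching as such: FLUX.1 [dev] is guidance-distilled, meaning it takes the guidance strength as an input and needs only one forward pass per step instead of an extra unconditional (or negative-prompt) pass, while [schnell] is distilled to generate in very few steps. Flow matching on its own doesn't rule out negative prompts; Stable Diffusion 3 is trained with flow matching and still supports them. The upside is cheaper sampling; the downside is that you can't explicitly tell the model what to avoid.

Workaround: make your positive prompts as specific as possible. Instead of "beautiful woman, negative: ugly, deformed", write "a beautiful woman with clear skin, well-proportioned features, and a natural expression".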

Performance optimization tips

Optimizing Flux

- Use FP8 or NF4 quantization to cut VRAM usage, accepting some possible loss in quality

- Use Flux [schnell] for drafts and [dev] for final images

- Enable xformers or Flash Attention for more memory-efficient attention

- Good starting points: up to 4 steps for [schnell] and 20–28 steps for [dev]
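The draft-then-final advice is easiest to judge with a time budget. A sketch using the RTX 4090 timings from the speed table (about 8 s per [schnell] image at 4 steps, about 57 s per [dev] image at 20 steps) for a hypothetical session of 20 drafts and 2 finals:

```python
SCHNELL_S = 8.0  # Flux.1 [schnell], 4 steps, 1024x1024 (from the speed table)
DEV_S = 57.0     # Flux.1 [dev], 20 steps, 1024x1024 (from the speed table)


def session_seconds(drafts: int, finals: int, draft_s: float, final_s: float) -> float:
    """Total generation time for a session, ignoring loading and model swaps."""
    return drafts * draft_s + finals * final_s


mixed = session_seconds(20, 2, SCHNELL_S, DEV_S)  # 20*8 + 2*57 = 274 s
dev_only = session_seconds(20, 2, DEV_S, DEV_S)   # 22*57 = 1254 s
print(f"schnell drafts + dev finals: {mixed / 60:.1f} min")
print(f"dev for everything:          {dev_only / 60:.1f} min")
```

That is roughly 4.6 versus 20.9 minutes. Keep in mind that [schnell] and [dev] are different models, so the same seed won't reproduce a draft in [dev]; the drafts mainly help settle the prompt and composition. Switching models also costs load time if both don't fit in VRAM at once.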

Optimizing SDXL

- Use SDXL Turbo or Lightning variants for faster generation

- Skip the refiner during drafts

- Iterate at lower resolution and upscale the final results

- Batch similar prompts together to make more efficient use of the GPU

Migrating from SDXL to Flux

If you're planning the switch, here is a practical migration guide:

Translating prompts

SDXL prompts don't always carry over one-to-one. The key differences:

| SDXL approach | Flux approach |
|---|---|
| Negative prompts to raise quality | Detailed positive descriptions |
| Style keywords (e.g., "masterpiece", "best quality") | Often unnecessary |
| Weighted syntax (word:1.5) | Not supported in most implementations |
| Token-optimized prompts | Natural language works better |
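Part of this translation can be automated. A rough sketch, assuming comma-separated SDXL prompts with A1111-style `(text:weight)` weights; the `sdxl_to_flux_prompt` helper and its list of quality tags are hypothetical, and the output is only a starting point for rewriting into natural language.

```python
import re

# Hypothetical set of SDXL-era quality tags; extend it for your own prompts.
QUALITY_TAGS = {"masterpiece", "best quality", "high quality", "ultra detailed", "8k"}

# Matches weighted fragments such as "(red vintage car:1.4)".
WEIGHTED = re.compile(r"\(([^():]+):\s*[0-9.]+\)")


def sdxl_to_flux_prompt(prompt: str) -> str:
    """Strip weight syntax and quality tags, keeping the descriptive phrases."""
    unweighted = WEIGHTED.sub(r"\1", prompt)
    parts = [p.strip() for p in unweighted.split(",")]
    kept = [p for p in parts if p and p.lower() not in QUALITY_TAGS]
    return ", ".join(kept)


print(sdxl_to_flux_prompt("masterpiece, best quality, (red vintage car:1.4), three children, 8k"))
# red vintage car, three children
```

The result still reads like a tag list; following the table, the last step is to turn it into a sentence such as "three children sitting in a red vintage car".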

Adapting your workflows

- Start with simple prompts: Flux understands natural language better

- Drop negative prompts and state your constraints and requirements in positive form

- Budget more time for generation and plan your workflow around it

- Expect ecosystem gaps: some LoRAs and tools may not be available yet

Looking ahead: where are these models going?

SDXL

Stability AI continues to develop the Stable Diffusion line: SD3 and SD3.5 brought notable improvements in text rendering (though they still don't match Flux). Even so, the SDXL ecosystem will stay relevant for years thanks to:

- Its huge existing library of resources

- Low hardware requirements

- Broad enterprise adoption

Flux

Black Forest Labs is actively developing Flux, and improvements are expected in:

- Generation speed

- Tooling and ControlNet equivalents

- Training and fine-tuning frameworks

- Commercial licensing options

We expect the gap in ecosystem maturity to narrow substantially by the end of 2025.

Frequently asked questions

Is Flux better than SDXL?

It depends on your use case. Flux delivers higher quality for photorealistic images, correct text rendering, and complex prompts. SDXL still wins on speed, stylized art, and tasks that need ControlNet or heavy LoRA use.

Can Flux run on 8 GB of VRAM?

Technically yes, with quantized models (NF4), but you'll have to accept trade-offs in speed and possibly in quality. For comfortable work with Flux, a GPU with 12 GB of VRAM or more is recommended.

Does Flux support LoRA models?

Yes, but the ecosystem is much smaller than SDXL's. Dedicated Flux LoRAs keep appearing, and some concepts from SDXL LoRAs can be retrained for Flux, but Flux still lags in variety.

Why doesn't Flux support negative prompts?

The released Flux models are distilled (FLUX.1 [dev] is guidance-distilled), so they run without the extra guidance pass that negative prompts rely on. Compensate with highly detailed positive prompts that spell out exactly what you want in the image.

Which model is better for anime and illustration?

SDXL currently leads for stylized content. Its mature ecosystem includes thousands of anime-focused LoRAs and checkpoints, while Flux leans toward photorealism even with style prompts.

Can Flux be used in commercial projects?

- Flux [schnell]: Yes (Apache 2.0 license)

- Flux [dev]: Non-commercial use only

- Flux [pro]: Yes, via the paid API

How long does Flux take to generate an image?

On an RTX 4090: roughly 45–60 seconds per 1024×1024 image at 20 steps for Flux [dev]. Flux [schnell] takes 8–10 seconds at 4 steps.

Should you switch from SDXL to Flux?

Switch if:

- Correct text rendering matters for your work

- Photorealism is a priority

- You have 12+ GB of VRAM

- You can live with slower generation

Stick with SDXL if:

- Speed is critical

- You rely heavily on LoRA and ControlNet

- You work on stylized art

- Your VRAM is limited
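If you want to apply this checklist consistently across projects, it can be encoded as a small function. This is one possible reading of the two lists (a Flux reason plus 12+ GB of VRAM, with any "stick with SDXL" condition blocking the switch); the class, field, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Needs:
    text_in_images: bool   # legible text matters for the work
    photorealism: bool     # photorealism is a priority
    vram_gb: int           # available GPU memory
    speed_critical: bool   # generation speed is critical
    lora_controlnet: bool  # relies heavily on LoRA and ControlNet
    stylized_art: bool     # mostly stylized art


def should_switch_to_flux(n: Needs) -> bool:
    """One reading of the checklist: a Flux reason, enough VRAM, no SDXL blocker."""
    has_flux_reason = n.text_in_images or n.photorealism
    enough_vram = n.vram_gb >= 12
    has_blocker = n.speed_critical or n.lora_controlnet or n.stylized_art
    return has_flux_reason and enough_vram and not has_blocker


print(should_switch_to_flux(Needs(True, True, 24, False, False, False)))  # True
print(should_switch_to_flux(Needs(True, True, 8, False, False, False)))   # False
```

Mixed answers across projects are a hint to run both models side by side rather than pick one.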

Conclusion

Choosing between Flux and SDXL isn't a question of which model is better, but of which one fits your needs.

Flux represents a new generation of image generation technology, with breakthrough improvements in text rendering, prompt adherence, and anatomical accuracy. It's the choice for photorealistic work, for professional scenarios that demand maximum accuracy, and for anyone pushing the boundaries of AI image generation.

SDXL remains a powerful creative tool: fast, backed by a mature ecosystem, and performant even on modest hardware. It's the best fit for bulk generation, stylized art, and workflows that depend on advanced control tools.

For many professionals, the answer isn't "either/or" but "both": Flux for final hero images and text-heavy content, SDXL for rapid iteration, stylized work, and complex controlled generation.

The AI image generation market is evolving rapidly. The key is to understand each tool's strengths and choose the one that best fits your tasks.