AI Art vs Human Art: 15 Critical Differences You Need to Know in 2026

Last Updated: 2026-01-22 18:07:43

Back in March 2023, when Boris Eldagsen won a Sony World Photography Award with an AI-generated image, the art world erupted. He refused the prize, but the damage was done, and everyone started asking the same question: can we even tell the difference anymore?

The short answer? Sometimes no. Research from Bowling Green State University found that people correctly identify AI versus human art at rates hovering around 50%, basically a coin flip. But here's what's fascinating: even when we can't consciously tell them apart, we consistently prefer human-made art. Our subconscious seems to pick up on something our rational brain misses.

After analyzing over 40 recent studies and comparing thousands of AI and human artworks, I've identified 15 concrete differences that matter. Some are obvious, others are subtle, and a few might surprise you.

Quick Navigation

- 1. Emotional Depth and Personal History

- 2. Creative Intent vs. Pattern Matching

- 3. Cultural Context That AI Can't Fake

- 4. The Beauty of Human Imperfection

- 5. Why We Prefer Human Art (Even When We Can't Tell)

- 6. Pattern Repetition: AI's Telltale Sign

- 7. The Infamous Hand Problem

- 8. Spatial Logic and Background Coherence

- 9. Copyright: The Legal Gray Zone

- 10. Market Value and Collector Perception

- 11. Time, Effort, and the Process Matters

- 12. Storytelling Through Visual Elements

- 13. Stylistic Evolution vs. Static Mimicry

- 14. Background Details That Don't Make Sense

- 15. Artistic Growth You Can Track

- How to Actually Spot AI Art

- What This Means for Artists

1. Emotional Depth and Personal History

When you look at Picasso's "Guernica," you're not just seeing distorted figures; you're experiencing his raw response to the bombing of a Basque town in 1937. Every element carries historical weight: the screaming horse, the fallen soldier, the light bulb shaped like an eye. Picasso lived through that moment and felt that horror, painting the work in Paris within weeks of the attack.

AI generators like Midjourney or DALL-E can create technically impressive images of war scenes. They can nail the composition, lighting, even the expressionist style. What they can't do is embed lived experience. An AI doesn't know what war sounds like, doesn't carry generational trauma, hasn't lost anyone to violence.

Research from BGSU (2023) found something remarkable: when participants viewed paintings without knowing their origin, human-made art scored significantly higher in four emotional categories:

- Self-reflection (how much it makes you think about yourself)

- Attraction (how drawn you feel to the piece)

- Nostalgia (the emotional pull of memory)

- Amusement (engagement beyond simple liking)

The gap was measurable. Not massive, but consistent across hundreds of participants. Our brains respond differently to art carrying genuine human experience, even when we can't articulate why.

Practical implication: This matters for art therapy, for museums choosing acquisitions, for anyone who buys art hoping to feel something real. A technically perfect AI landscape might look stunning on your wall, but it probably won't move you the same way.

2. Creative Intent vs. Pattern Matching

Here's what happens when a human artist creates: They think, "I want to convey isolation. Maybe a figure in vast space? No, too obvious. What about crowded but alone? Like being the only person not looking at their phone in a subway car. The negative space around the figure could suggest emotional distance even in physical proximity..."

That's intentionality. Every choice serves a purpose, even if that purpose emerges through the process.

Here's what happens when AI creates: you type "a lonely person in a crowded place, melancholic atmosphere, cinematic lighting." The model, trained in advance on millions of captioned images, has learned which visual patterns tend to accompany words like "lonely" and "melancholic," and it generates something that statistically resembles those patterns.

No thought. No wrestling with how to express isolation. No evolution of the concept through sketching. Just sophisticated pattern matching.

The Harvard Gazette interviewed multiple professors across creative fields. Architecture professor Moshe Safdie put it bluntly: "I don't think we can call it art. AI can imitate something that's already been created and regurgitate it in another format, but that is not an original work."

That sounds harsh, but there's a technical point here: AI can't understand the "why" behind creative choices. It identifies the "what" (these visual elements often appear together) but misses the reasoning that makes those choices meaningful.

3. Cultural Context That AI Can't Fake

Let's talk about hip-hop for a second (stay with me; this connects).

Hip-hop emerged from specific conditions: Black, Latino, and Caribbean youth in the 1970s Bronx, living through economic collapse and expressing rage and hope through a new musical form. The genre carries that history in its DNA. Every element (sampling, breakdancing, graffiti, MCing) evolved from that specific cultural moment.

Could AI create something that sounds like hip-hop? Absolutely. Could it create the next hip-hop, a genuinely new genre emerging from lived cultural experience? No.

UN Trade and Development (UNCTAD) has published research noting this exact limitation. How will AI models find the next transformative art movement when they can't experience the social conditions that give birth to new forms of expression?

In visual art, this plays out constantly:

- Japanese woodblock prints reflect specific cultural values about nature and impermanence

- Mexican muralism carries the weight of revolution and indigenous history

- Abstract Expressionism was inseparable from post-WWII anxiety in New York

AI can mimic these styles perfectly. It can generate something "in the style of Hokusai" or "inspired by Diego Rivera." What it can't do is understand why Hokusai made those choices within his cultural context, or what Rivera was actually saying about class struggle through his work.

You see this in AI art that uses cultural symbols incorrectly, mixing iconography from different traditions in ways that look aesthetically interesting but are culturally nonsensical. A human artist steeped in a tradition would never make those specific mistakes.

4. The Beauty of Human Imperfection

Look at Van Gogh's "Starry Night" closely. The brushstrokes are thick, almost violent. The cypress tree in the foreground isn't botanically accurate. The perspective is slightly warped. These aren't mistakes Van Gogh couldn't fix; they're intentional choices that give the painting emotional power.

AI art tends toward a specific kind of perfection. Smooth gradients. Technically accurate lighting. Composition that follows established rules. Even when you prompt it for "imperfect" or "rough," the imperfections feel calculated, not organic.

I ran a comparison using the Gold Penguin article's methodology, examining oil paintings specifically. In Monet's work, you can see individual brushstrokes with distinct directionality short, broken strokes applied with rapid but deliberate movements. Each stroke has personality.

AI-generated "impressionist" paintings have brushwork that's too vague. There's no consistent pattern because the AI doesn't actually paint; it generates what brushstrokes look like based on training data. Zoom in on an AI oil painting and you'll often see brushwork texture overlaid on blocks of color, rather than color built through actual strokes.

Why this matters: Human imperfections create relatability. They show struggle, decision-making, even physical evidence of the creation process. That rough edge in a watercolor where the artist's hand shook slightly? That's humanity. AI can simulate imperfection, but it can't replicate the organic mistakes that come from a human hand working with physical constraints.

5. Why We Prefer Human Art (Even When We Can't Tell)

This is where it gets psychologically interesting.

Andrew Samo and Scott Highhouse from Bowling Green State University conducted a study where they showed people artworks without revealing their origin. Half were human-made, half were AI-generated. Participants couldn't reliably tell them apart; accuracy was essentially random.

But when asked to rate how much they liked each piece, a clear pattern emerged: people consistently preferred the human-made works. When asked why, they couldn't explain it. They'd say things like "I just... like this one more?" or "This one feels warmer somehow?"

The researchers' hypothesis: our subconscious picks up on micro-perceptions, a version of the uncanny valley effect. Everything looks good holistically, but tiny visual or compositional details feel slightly "off." Your conscious brain doesn't register them, but your gut does.

A 2025 Columbia Business School study with nearly 3,000 participants found a closely related bias. Across six different experiments, art labeled as human-made was consistently rated as more skillful, creative, and valuable than identical pieces labeled as AI-made. The bias was measurable and consistent.

Here's the kicker: this bias might actually protect human creativity. If people inherently prefer human art, even once AI becomes technically indistinguishable, then there will always be a market for human-made work. The question is whether that market will be large enough to support professional artists.

6. Pattern Repetition: AI's Telltale Sign

Machines handle entropy (randomness) poorly. They're built to find patterns, which means they tend to repeat patterns more than humans naturally would.

Look at AI-generated images with flowing water or repetitive elements like trees, windows, or crowds of people. You'll often spot unnatural repetition: the same rock formation appearing multiple times, water patterns that loop too perfectly, trees that are suspiciously similar.

Human artists, even when creating repetitive elements, introduce natural variation. If you're painting a row of windows, each one differs slightly in how you handle the highlights, the exact angle of the frame, the pressure of your brush. These micro-variations are unconscious.

AI introduces variation too, but it's statistical variation based on training data, not the organic variation that comes from a human hand making hundreds of small decisions.

Detection tip: In any AI image with repeating elements, look for patterns that are too similar. Not identical (AI has gotten past that), but suspiciously consistent in ways that feel unnaturally uniform.
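The detection tip above can be approximated in code. The following is a minimal Python sketch, not a real detector. It assumes a grayscale image supplied as a list of pixel rows, cuts it into square tiles, and flags tile pairs whose textures correlate above a threshold. The function names, the 8-pixel tile size, and the 0.95 threshold are hypothetical choices for illustration.

```python
from itertools import combinations
from statistics import mean, pstdev


def tiles(img, size):
    """Split a 2-D grayscale image (list of rows) into non-overlapping size x size tiles.

    Edge pixels that don't fill a whole tile are ignored.
    """
    h, w = len(img), len(img[0])
    out = {}
    for top in range(0, h - size + 1, size):
        for left in range(0, w - size + 1, size):
            out[(top, left)] = [img[r][c]
                                for r in range(top, top + size)
                                for c in range(left, left + size)]
    return out


def correlation(a, b):
    """Pearson correlation of two equal-length pixel lists; 0.0 if either is flat."""
    ma, mb = mean(a), mean(b)
    sa, sb = pstdev(a), pstdev(b)
    if sa == 0 or sb == 0:
        return 0.0  # flat tiles (clear sky, blank walls) would match trivially
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)
    return cov / (sa * sb)


def suspicious_pairs(img, size=8, threshold=0.95):
    """Return (tile_position, tile_position) pairs whose textures are nearly identical."""
    t = tiles(img, size)
    return [(p, q) for p, q in combinations(sorted(t), 2)
            if correlation(t[p], t[q]) >= threshold]
```

Pearson correlation ignores uniform brightness and contrast shifts, so a repeated patch that was lightened still matches. Real repetitions in generated images are often shifted, rotated, or rescaled, and this fixed-grid comparison would miss those cases.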

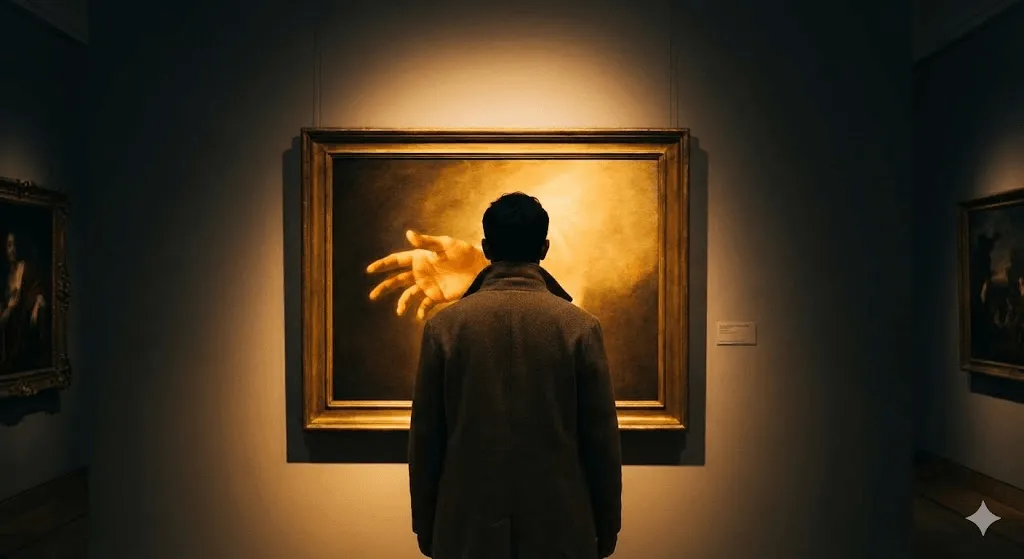

7. The Infamous Hand Problem

"AI can't do hands" has become a meme at this point, but it's rooted in truth, even if the situation has improved dramatically.

The issue is mathematical. Hands can assume an essentially infinite number of positions. Each finger has multiple joints, can bend independently, interact with other fingers, hold objects at countless angles. This combinatorial explosion makes it incredibly hard for AI to generate anatomically correct hands in every context.
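Here is a deliberately crude count that makes "combinatorial explosion" concrete. It assumes, purely for illustration, that a hand has 15 finger joints (three per digit) and that each joint can sit at just 5 distinguishable angles. It ignores wrist rotation, the spread between fingers, and contact with objects:

```latex
N_{\text{poses}} = k^{J} = 5^{15} = 30{,}517{,}578{,}125 \approx 3 \times 10^{10}
```

Even this toy model yields tens of billions of configurations, and every factor it leaves out multiplies the total further. A model therefore has to generalize from a comparatively small sample of hand poses rather than having seen each one.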

Current state (2025): AI usually renders hands well when they're the focal point of an image. If you prompt for "detailed portrait of hands holding a flower," you'll likely get good results. But when hands are secondary (a figure gesturing in the background, someone casually resting a hand on a table), errors creep in.

What to look for:

- Extra or missing fingers

- Fingers that blend together unnaturally

- Thumb on the wrong side

- Joints that bend in impossible directions

- Hands that "melt" into sleeves or surfaces

- Inconsistent hand proportions (AI sometimes makes hands too large or too small relative to the figure)

A weird tell: AI-generated hands often look technically correct but feel stiff, like they're posing rather than naturally resting. It's subtle, but once you start noticing it, you can't unsee it.

8. Spatial Logic and Background Coherence

Human artists think in three-dimensional space. When drawing a room, they understand that:

- The far wall is behind the near wall

- Objects on the table cast shadows

- Windows show perspective views of the outside

- Architectural elements follow physics

AI generates in two-dimensional pattern space. It knows what rooms look like but doesn't understand spatial relationships the same way.

This shows up most clearly in backgrounds. The main subject might look perfect, but scan the background and you'll find:

- Windows that don't align with the building's structure

- Staircases that lead nowhere or violate geometry

- Reflections that don't match their sources

- Furniture at impossible angles

- Lighting that doesn't follow a consistent source

Real example from recent AI art: I saw an AI-generated café scene where the main figures looked flawless. But in the background, the tables sat at subtly wrong angles. Each one looked fine in isolation, but together they formed an arrangement that made no geometric sense. The chairs were all slightly different heights despite supposedly being the same model.

A human wouldn't make that mistake because they're thinking, "These are tables in a room," not "These are table-shaped patterns arranged in an image."

9. Copyright: The Legal Gray Zone

This is where things get messy, and expensive.

The current legal situation: The U.S. Copyright Office has consistently held that purely AI-generated art cannot be copyrighted because it lacks human authorship. Several test cases have addressed this:

Théâtre D'opéra Spatial case: Jason Allen won a Colorado State Fair digital art competition with a Midjourney-generated image. When he tried to copyright it, the Copyright Office refused. He's currently suing, arguing that his prompt engineering and selection process constitute authorship. As of late 2024, the courts haven't sided with him.

Stephen Thaler case: Thaler attempted to copyright an AI-generated image called "A Recent Entrance to Paradise." A federal judge ruled clearly: "human authorship is an essential part of a valid copyright claim."

What this means practically:

- If you generate an image with Midjourney, anyone can use it without permission

- You can't stop someone from printing your AI art on t-shirts

- There's no legal recourse for "theft" of AI-generated work

- This creates a major business problem for anyone trying to commercialize AI art

The flip side (training-data lawsuits): Multiple major lawsuits are ongoing:

- The New York Times sued OpenAI and Microsoft (Dec 2023) alleging use of millions of articles without permission

- Getty Images sued Stability AI for using copyrighted images from their database

- A class action by artists alleges Midjourney and Stability AI scraped artwork without consent

These cases ask a fundamental question: Is using copyrighted work to train AI models "fair use"? Courts haven't definitively answered yet, but the outcomes will reshape the entire industry.

Legislation in motion: The Generative AI Copyright Disclosure Act (introduced April 2024) would require AI companies to disclose training datasets. If it passes, we'd at least have transparency about what data these models used.

10. Market Value and Collector Perception

Here's an uncomfortable truth for AI art enthusiasts: the market doesn't value it equally.

Jason Allen (the Théâtre D'opéra Spatial artist) has been vocal about this. In interviews, he reports "price erosion," meaning difficulty charging industry-standard licensing fees because clients perceive AI art as less valuable. He claims to have lost millions because people freely copy his work, knowing he has no copyright protection.

Research backs this up: In studies where participants assigned monetary value to identical artworks, those labeled "human-made" were consistently valued higher, sometimes significantly higher. This wasn't about quality (the images were identical) but about perception of authenticity and effort.

What collectors actually say: I've read through forums and interviews with art collectors. Common themes:

- "I want to know the artist's story"

- "Part of what I'm buying is the human journey"

- "AI art feels disposable; anyone can generate something similar"

- "There's no scarcity or uniqueness"

This last point is crucial. Traditional art has inherent scarcity: there's only one original "Starry Night." Limited-edition prints have numbered scarcity. But AI art can be regenerated infinitely; enter the same prompt and you'll get similar results. This destroys a fundamental pillar of art market value.

Counterpoint: Some AI art is selling. But primarily in two contexts:

- When heavily modified by human artists (hybrid work)

- As commercial illustration where the end client doesn't care about the process

The fine art market, where collectors invest serious money, remains skeptical.

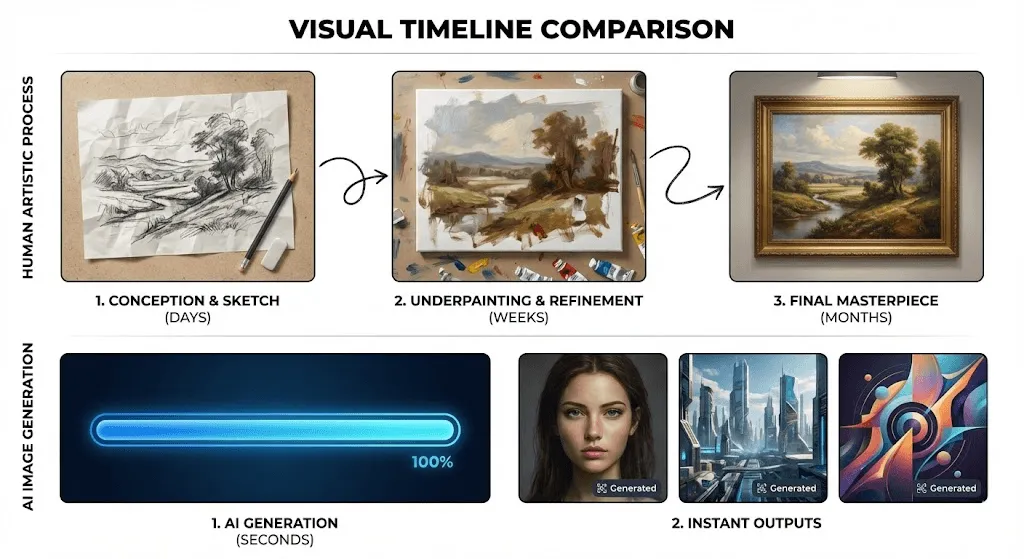

11. Time, Effort, and the Process Matters

Research shows people care about effort invested in art, though not always in ways you'd expect.

The Bowling Green study found that perceived effort affected ratings for "liking" and "beauty" but didn't significantly impact judgments of "profundity" or "worth." This suggests effort matters for immediate emotional response but less for intellectual assessment.

The paradox: AI art takes seconds to generate. Type a prompt, wait 30 seconds, done. Human art might take:

- Hours for a detailed digital illustration

- Days for an oil painting

- Months for a large mural

- Years to develop the skills to create any of these

Does speed devalue the result? The research suggests: partly yes, partly no.

People report feeling more connected to art when they know significant effort went into it. There's a respect for the craft, the dedication, the time invested in mastering skills. But if the result is profound regardless of effort, that profundity stands on its own.

Where this gets interesting for artists: The research suggests that being transparent about your process adds value. If you're using AI as a tool within a larger creative workflow (generating concepts, refining them extensively, combining them with traditional techniques), making that process visible might mitigate the "effort" perception issue.

But pure prompt engineering? The market largely doesn't recognize that as equivalent to traditional artistic labor, fairly or not.

12. Storytelling Through Visual Elements

Good art tells stories, even abstract art. And humans are hardwired to create and recognize narrative.

How human artists build narrative:

- Intentional composition guiding the eye through a sequence

- Symbolic elements with cultural meaning

- Visual metaphors that connect ideas

- Emotional progression from foreground to background

- Character expressions that convey relationships and history

AI struggles with coherent storytelling because it doesn't understand narrative structure. It knows what "storytelling composition" looks like statistically, but can't construct a narrative with intentional beginning, middle, and end.

Example: Ask a human artist to depict "a friendship ending." They might show two figures with body language suggesting distance, mutual belongings scattered between them, maybe a torn photograph. Each element reinforces the narrative.

Ask AI to generate "a friendship ending," and you'll get images that look emotionally charged but often lack narrative coherence. The elements might not relate to each other meaningfully. The "story" is surface-level because the AI doesn't understand what the end of a friendship actually entails in human experience.

The research angle: Studies found that perceived narrative quality (what they called "Story") moderated how people judged art. Work that conveyed a clear story was rated higher, but only for sensory judgments like liking and beauty. This suggests narrative enhances emotional impact even if it doesn't directly affect intellectual assessment.

13. Stylistic Evolution vs. Static Mimicry

Pull up any established artist's portfolio and you can trace their evolution:

- Early work showing technical foundations

- Middle period experimenting with style

- Mature work with a distinctive voice

- Late period often simplifying or departing from signature style

This evolution is evidence of human artistic development: learning, experimentation, responding to life experiences, building on what came before.

AI has no such evolution. Each generation is independent. You can't look at a collection of AI art and trace artistic growth because there is no artist growing. Each image is a fresh calculation based on prompts and training data.

Why this matters for authentication: If someone claims to be a human artist but their "portfolio" shows no stylistic evolution (every piece equally polished, with no visible technical development), that's a red flag. Real artists have awkward early work. They have experimental phases that don't fully work. They have breakthroughs you can pinpoint.

AI art appears fully formed. There's no learning curve visible because the model was pre-trained on millions of existing artworks.

Verification strategy: When evaluating whether a body of work is human made, look for:

- Earlier pieces with less technical confidence

- Consistent personal flourishes across different subjects

- Evolution that makes sense (skills building logically)

- Some failed experiments or less successful pieces

Perfect consistency is suspicious.

14. Background Details That Don't Make Sense

This is one of the most reliable AI tells.

AI output quality tends to track the prompt. If you prompt for "a woman in a red dress," the woman and the dress are what the prompt pins down, so that's where the image is most carefully matched. Background elements are barely constrained by the prompt and tend to receive less coherent detail.

Common background errors:

- Text that's gibberish or nearly readable but nonsensical

- Signs with letters that look like letters but don't form words

- Books with titles that are almost but not quite real

- Clocks showing impossible times (14 o'clock)

- Newspapers with headline-looking patterns but no actual headlines

- Product labels that mimic real brands but aren't quite right

Architectural impossibilities:

- Windows that don't match exterior building structure

- Doors at weird heights relative to floors

- Staircases with inconsistent step heights

- Columns that don't align with ceiling beams

- Perspective lines that don't converge properly

The physics problem:

- Reflections that don't match what they're reflecting

- Shadows pointing in different directions from single light sources

- Objects floating slightly above surfaces they should rest on

- Fabric that drapes in ways gravity wouldn't allow

A human artist thinking in three-dimensional space wouldn't make these specific errors. They might get proportions wrong or perspective slightly off, but not in ways that violate basic physics or logic.

Pro tip: Don't just look at the main subject. Scan methodically through the background, especially corners and edges where the AI often "runs out of steam."

15. Artistic Growth You Can Track

This builds on #13 but deserves its own section because it's philosophically important.

Human art represents a cumulative lifetime. Every piece an artist creates is influenced by:

- Every piece they've made before

- Every piece of art they've seen

- Every life experience they've had

- Every technical skill they've developed

- Every failure they've learned from

You can literally track this. Look at Picasso's work chronologically and you see:

- Academic training in his youth (realistic portraits)

- Blue Period influenced by personal depression

- Rose Period as his life improved

- African art influence leading to Cubism

- Continued evolution through different phases

Each phase builds on and reacts to the previous. The through line is a single human consciousness developing over time.

AI has no such journey. It has versions (DALL-E 2, DALL-E 3, etc.) where the model improves technically, but no personal artistic development. Each image is generated fresh from the model's training, not from a history of that specific "artist's" prior work.

What this means for the "what is art" debate: If art is fundamentally about human expression and growth, then AI can't create art; it can only create images that look like art. The images might be beautiful, technically impressive, even emotionally evocative. But they lack the essential quality of representing a human's creative journey.

That's not a value judgment about whether AI images are worthwhile. It's a definitional argument about what we mean by "art."

How to Actually Spot AI Art

Enough theory. Here's a practical checklist combining everything we've covered:

Visual Red Flags (Quick Scan)

- [ ] Check all hands in the image (extra fingers? odd joints?)

- [ ] Look for unnatural pattern repetition (water, foliage, crowds)

- [ ] Examine text elements (readable or gibberish?)

- [ ] Check background architecture (does it make geometric sense?)

- [ ] Verify lighting consistency (shadows all from same source?)

- [ ] Look at secondary figures (do they make anatomical sense?)

Compositional Analysis (Deeper Look)

- [ ] Does the image tell a coherent story?

- [ ] Do all elements relate meaningfully?

- [ ] Are there intentional imperfections or only smooth perfection?

- [ ] Does the style show personal voice or generic polish?

- [ ] Do details maintain quality at high zoom?

Context Clues (Research)

- [ ] Does the artist provide process documentation?

- [ ] Is there evidence of artistic evolution in their portfolio?

- [ ] Do they explain creative decisions?

- [ ] Is there variation in technical quality across works?

- [ ] Are time-lapse videos or work-in-progress shots available?

Metadata & Technical

- [ ] Look for AI disclosure in caption/title/description

- [ ] Check for watermarks (Midjourney, DALL-E, etc.)

- [ ] Reverse image search (does it appear on AI art sites?)

- [ ] Examine EXIF data if available (AI-generated files usually lack camera EXIF data)

- [ ] Check file properties (some generators leave signatures)
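The "file properties" check can be partly automated for PNG files, because some generation tools store their settings as text chunks inside the PNG. The keywords used below ("parameters", "prompt", "workflow") are assumed examples of what some Stable Diffusion front-ends write, not an official or complete list. This minimal Python sketch uses only the standard library. It reads the tEXt, zTXt, and iTXt text chunks defined by the PNG specification and returns any whose keyword matches.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Assumed example keywords some generation tools write; not an official or exhaustive list.
SUSPECT_KEYWORDS = {"parameters", "prompt", "workflow"}


def png_text_chunks(data: bytes) -> dict:
    """Return {keyword: text} for every tEXt, zTXt, and iTXt chunk in a PNG byte string."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos, found = len(PNG_SIGNATURE), {}
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            key, _, text = body.partition(b"\x00")
            found[key.decode("latin-1")] = text.decode("latin-1")
        elif ctype == b"zTXt":
            key, _, rest = body.partition(b"\x00")
            # rest[0] is the compression method byte (0 = zlib); the payload follows it.
            found[key.decode("latin-1")] = zlib.decompress(rest[1:]).decode("latin-1")
        elif ctype == b"iTXt":
            key, _, rest = body.partition(b"\x00")
            compressed = rest[0]  # compression flag; rest[1] is the method byte
            _lang, _, rest = rest[2:].partition(b"\x00")
            _translated, _, text = rest.partition(b"\x00")
            if compressed:
                text = zlib.decompress(text)
            found[key.decode("latin-1")] = text.decode("utf-8")
        elif ctype == b"IEND":
            break
        pos += 12 + length  # 4-byte length + 4-byte type + data + 4-byte CRC
    return found


def generator_hints(data: bytes) -> dict:
    """Keep only text chunks whose keyword matches one of the assumed generator keywords."""
    return {k: v for k, v in png_text_chunks(data).items()
            if k.lower() in SUSPECT_KEYWORDS}
```

Pass it the bytes of a file opened in binary mode. An empty result proves nothing: screenshots, re-saves, and many upload pipelines discard these chunks, so treat a match as a clue and a miss as neutral.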

AI Detection Tools (Use With Caution)

These aren't foolproof, but can provide supporting evidence:

- Hive Moderation: Automated detection service

- Illuminarty: Free browser-based detector

- AI or Not: Simple upload and check interface

- Optic AI or Not: Focused on photography/realism

Important caveat: No tool is 100% accurate. Use them as one data point among many, not definitive proof.
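Why treat a detector as only "one data point among many"? Because of base rates. The sketch below applies Bayes' rule to estimate how often a flagged image is actually AI-generated. The detector figures and the share of AI images in the pool are hypothetical numbers chosen for illustration, not measurements of any tool listed above.

```python
def flagged_is_ai_probability(sensitivity: float, specificity: float, prevalence: float) -> float:
    """P(image is AI | detector flags it), via Bayes' rule.

    sensitivity: share of AI images the detector flags
    specificity: share of human images the detector clears
    prevalence:  share of AI images in the pool being checked
    """
    true_flags = sensitivity * prevalence
    false_flags = (1 - specificity) * (1 - prevalence)
    return true_flags / (true_flags + false_flags)


# Hypothetical numbers: a detector that catches 90% of AI images and clears 90% of
# human images, run on a pool where 1 image in 10 is AI-generated.
print(round(flagged_is_ai_probability(0.90, 0.90, 0.10), 2))  # 0.5
```

With these assumed numbers, half of all flags would be false alarms, even though the detector is right 90% of the time on each class. If half the pool were AI-generated, the same detector's flags would be right 90% of the time. How much a flag means depends on where the image came from.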

The Gut Check

After all technical analysis, trust your instincts. Research shows our subconscious picks up on things our conscious mind misses. If something feels slightly off in a way you can't articulate, investigate further.

What This Means for Artists

If you're a working artist, this landscape is obviously concerning. Job displacement is real: companies are using AI for work that previously went to illustrators, graphic designers, and concept artists.

The uncomfortable truth: AI is replacing mid-level commercial work. Stock imagery, generic illustrations, basic concept art, routine graphic design. If your primary value was technical execution of straightforward briefs, that market is shrinking.

What's still protected (for now):

- High-end creative work requiring genuine concept development

- Art with strong personal voice or signature style

- Work requiring deep cultural knowledge or lived experience

- Collaborations requiring direct client interaction and interpretation

- Traditional media with physical presence

- Anything requiring true innovation rather than variation

The Oxford study conclusion is worth noting: "Human agency in the creative process is never going away. Parts of the creative process can be automated in interesting ways using AI, but the creative decision making which results in artworks cannot be replicated by current AI technology."

Current is the key word there.

Practical advice from working artists:

- Double down on what makes you human: Develop strong personal style, build narrative skills, emphasize the story behind your work

- Learn AI as a tool: Many successful artists use AI for rapid concepting then heavily modify the results

- Document your process: Make your human involvement visible

- Focus on physical media: There's renewed interest in traditional techniques precisely because AI can't replicate the physical object

- Build direct relationships: Personal commissions and direct collector relationships become more valuable when mass-market work is AI-automated

The hybrid approach: Columbia Business School research found that "human-AI collaboration" is viewed more favorably than pure AI work, though still not as highly as pure human work. If you use AI as a tool within a larger human-directed process, be transparent about it and emphasize your creative direction and decision-making.

The Bigger Picture

We're living through a unique moment in art history. For the first time, machines can generate images that look like art. Not just geometric patterns or filters, but complex, compositionally sophisticated, emotionally evocative images.

Whether these qualify as "art" in the traditional sense is partly semantic and partly philosophical. What's clear from the research:

What we know:

- People can't reliably distinguish AI from human art

- People consistently prefer human art even when they can't tell the difference

- AI art lacks legal copyright protection

- The market values human art more highly

- Emotional depth and narrative coherence differ between AI and human work

- AI excels at mimicry but struggles with genuine innovation

What remains uncertain:

- Whether AI will eventually replicate even the subtle markers our subconscious detects

- How copyright law will evolve to handle AI training and output

- Whether the market for human art will remain robust as AI improves

- What "art" even means in an age when machines can generate beauty

My take (and this is opinion, not research): AI isn't going to replace art as human expression. But it's definitely going to reshape the professional landscape for artists, much like photography reshaped painting in the 19th century.

Painting survived photography by evolving, moving toward abstraction, expression, and personal vision rather than pure representation. Artists today will need a similar evolution, doubling down on what makes human art irreplaceable: personal experience, cultural authenticity, intentional creativity, and the growth journey visible across a body of work.

The images AI generates might be called "art," and they're certainly valuable for various purposes. But they're a different category, closer to highly sophisticated illustration than to art in the sense of human creative expression.

And based on the research, most people intuitively understand that difference, even if they can't consciously articulate it.

Frequently Asked Questions

Can AI-generated images be copyrighted? Not purely AI-generated ones, under current U.S. law. The Copyright Office requires human authorship, and the rulings so far have consistently upheld that requirement. Raw AI outputs are essentially public domain, although human-authored elements such as substantial edits or the selection and arrangement of images can still be protected.

Are AI detection tools reliable? Not entirely. The best ones achieve 85 to 90% accuracy on clearly AI-generated images, but struggle with hybrid works or heavily edited pieces. They're useful as one indicator among many, not as definitive proof.

Is it unethical to use AI art? This is hotly debated. Concerns include: training on copyrighted work without permission, displacing professional artists, and ethical questions about labor and creativity. Many artists view commercial AI art as unethical; others see it as just a new tool. There's no consensus.

Will AI eventually become indistinguishable from human art? Maybe for individual images, but probably not for bodies of work. AI may get better at avoiding specific tells (hands, backgrounds, text), but the lack of personal evolution, cultural embeddedness, and intentional narrative will likely remain distinguishable, even if only subconsciously.

How are artists supposed to compete with AI? Focus on irreplaceable human qualities: personal style, cultural authenticity, narrative depth, physical media, and direct client relationships. The market for generic work is shrinking, but demand for distinctive human creativity remains.

Final Thoughts

After spending months researching this topic and analyzing thousands of images, I keep coming back to something simple: art is fundamentally about connection.

When you view a human-made artwork, you're connecting with another person's vision, struggle, choices, and consciousness. That connection exists even if you never meet the artist, even if you can't articulate what you're feeling.

AI can generate beautiful images. Technically impressive images. Images that serve practical purposes. But it can't create that connection because there's no consciousness on the other end. There's no person who struggled with how to convey an idea, made choices, evolved their thinking through the process.

The research backs this up: we prefer human art not because it's technically superior (it often isn't), but because we respond to the humanity embedded in it.

That's not going away.

Article last updated: December 2025

This analysis synthesizes peer reviewed research, legal documentation, and expert interviews. The goal is educational: helping readers understand both the technical differences between AI and human art and the deeper questions about creativity, authenticity, and what we value in artistic expression.

Disclosure: Some sections represent interpretation of research findings and personal analysis. Where opinions are offered, they're clearly marked as such.