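How AI Image Generation Works: A Step-by-Step Guide from Prompt to Finished Image

Last updated: 2026-01-22 18:08:25

What actually happens after you type a prompt? (An accessible look at the technology)

You probably know the experience by now: type "a cat wearing a wizard hat, oil painting style" into Midjourney or DALL-E, press Enter, and within about 30 seconds an image that never existed before appears on screen. It can feel like magic.

It is not magic; it is the result of a great deal of math. Understanding how it works is more than interesting: once you know why a prompt has the effect it does, you can stop guessing at random and start creating deliberately.

The sections below walk through the underlying logic. This is not an academic treatment, but it covers enough to give you a solid understanding of the core mechanisms behind AI image generation.

Key points (30-second overview):

An AI image generator first converts your prompt into a numeric text embedding. Then, starting from random noise in a compressed "latent space", a diffusion model removes the noise step by step under the guidance of the prompt, and the result is finally decoded into pixels. Parameters such as CFG (guidance scale), the number of sampling steps, and the seed let you control how closely the image follows the prompt and how reproducible the results are.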

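The two core problems AI image generation has to solve

Every AI image generator revolves around two closely linked tasks: understanding what the prompt means, and generating an image that matches it.

Challenge 1: understanding what you mean. When you type "a mountain sunset with spectacular lighting", the system relies on natural language processing to turn the phrase into visual concepts it can act on. It has to work out what "spectacular" looks like and how the light of a setting sun interacts with the shadows of a mountain range.

Challenge 2: generating the pixels. To render your request, the system has to compute up to millions of color values that add up to coherent objects with plausible lighting and correct perspective. This is the computer vision side of the problem.

Modern systems address both challenges with neural networks, a computing architecture loosely inspired by the way neurons in the brain connect and communicate.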

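Neural networks: the foundation

Before looking at specific architectures, it helps to understand how neural networks process images.

Unlike human vision, a computer "reads" an image as a large grid of numbers. A 512×512 color image, for example, consists of 786,432 individual values (512 × 512 pixels × 3 color channels), and the network's job is to find patterns in that data.

During training, a neural network learns from millions of images. Stable Diffusion, for example, was trained on data drawn from LAION-5B, a dataset of roughly 5.85 billion image-text pairs collected from the public web. By analyzing the text associated with each image (alt text, captions, and surrounding context), the model learns how language relates to visual concepts.

The network learns image patterns hierarchically: shallow layers pick up edges and basic shapes, middle layers recognize parts such as eyes, wheels, or leaves, and deep layers capture overall concepts and artistic styles.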

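From GANs to diffusion models: how AI image generation evolved

AI image generation has changed enormously in recent years. Tracing that evolution explains why today's tools far outperform those from three years ago.

The GAN era (2014-2021)

Generative adversarial networks (GANs), introduced by Ian Goodfellow in 2014, dominated the field for years on the strength of one clever idea: pit two neural networks against each other.

The generator tries to produce realistic images, while the discriminator tries to spot the fakes. As the discriminator gets better at telling real from fake, the generator has to keep improving to fool it. This "arms race" pushes both networks to improve together and drives up the quality of the generated images.

By 2019, GANs had made remarkable progress; StyleGAN could generate fictional faces that were hard to tell from real ones. Their limitations were also becoming clear: because the two networks are difficult to keep in balance, training is notoriously unstable, and GANs tend to struggle with complex multi-object scenes and fine details such as hands.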

The diffusion revolution (2020 to present)

A look at the technology behind AI image generation: how diffusion models use math to turn a prompt into detailed pixels.


In 2020, Jonathan Ho, Ajay Jain, and Pieter Abbeel of UC Berkeley published the influential paper "Denoising Diffusion Probabilistic Models" (DDPM), a breakthrough that changed the course of AI image generation.

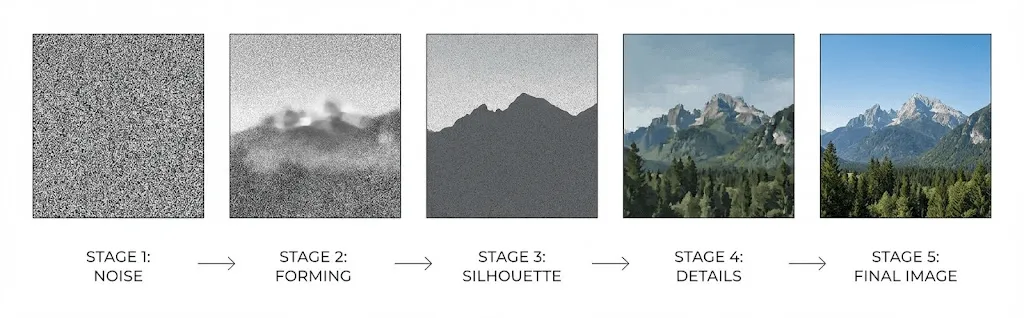

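The core idea of a diffusion model is learning to reverse noise. Noise is added to an original image little by little until it becomes pure random pixels, and a neural network is trained to undo that process, "denoising" static step by step back into an image.

Forward diffusion: Gaussian noise is added to a training image gradually, over as many as 1,000 steps, until its features are buried and only unrecognizable random static remains.

Reverse diffusion: a neural network is trained to predict the noise and remove it step by step, gradually reconstructing a clear, coherent image from pure randomness.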
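
The forward (noising) half of this process can be sketched in a few lines of plain Python. This is a minimal illustration, not a training pipeline: it assumes the linear noise schedule described in the DDPM paper (1,000 steps, per-step variance rising from 1e-4 to 0.02), and the function names and the four-value toy "image" are made up for the example.

```python
import math
import random

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (values assumed from the DDPM paper)."""
    return [beta_start + (beta_end - beta_start) * t / (num_steps - 1)
            for t in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] is the running product of (1 - beta): the share of signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * noise."""
    a_bar = alpha_bars[t]
    return [math.sqrt(a_bar) * v + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)
            for v in x0]

alpha_bars = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
pixels = [0.5, -0.25, 1.0, 0.0]                        # a toy "image" of four values
slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)
pure_static = add_noise(pixels, 999, alpha_bars, rng)  # almost no signal remains
```

Under this schedule, almost all of the original signal survives the first step, while by the last step the remaining share is a tiny fraction of a percent, which is why the final result is effectively pure static. Training the reverse process amounts to teaching a network to predict the `noise` term from the noisy values and the step number.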

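Compared with GANs, this gradual approach has no adversarial game to balance, so training is inherently more stable. It also produces more diverse results and follows complex prompts more reliably.

In 2021, Dhariwal and Nichol published the landmark paper "Diffusion Models Beat GANs on Image Synthesis", which established diffusion models as the leading approach to image synthesis.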

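How text-to-image works: the full pipeline from prompt to pixels

When you type a prompt into Stable Diffusion, DALL-E, or Midjourney, this is roughly what happens behind the scenes (the specifics below follow Stable Diffusion, whose architecture is public):

Step 1: Encode the text with CLIP

Your text is first processed by a text encoder such as CLIP (Contrastive Language-Image Pre-training), developed by OpenAI.

CLIP was trained on 400 million image-text pairs and learned to link language with visual concepts. It converts your prompt into high-dimensional vectors (typically 768 or 1,024 dimensions) that carry its meaning.

These vectors live in a shared "embedding space" where concepts with similar meanings sit close together: "dog" and "puppy" produce similar vectors, while very different concepts such as "dog" and "skyscraper" produce vectors that are far apart.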
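
One common way to measure how "close" two embeddings are is cosine similarity. The sketch below uses hand-picked 4-dimensional vectors purely for illustration; they are hypothetical and do not come from CLIP, whose real vectors have hundreds of dimensions and are produced by the trained encoder.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1 means similar direction, near 0 unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, chosen by hand so that related concepts point the same way.
dog = [0.9, 0.8, 0.1, 0.0]
puppy = [0.8, 0.9, 0.2, 0.0]
skyscraper = [0.0, 0.1, 0.9, 0.8]

dog_vs_puppy = cosine_similarity(dog, puppy)            # close to 1
dog_vs_skyscraper = cosine_similarity(dog, skyscraper)  # close to 0
```

With these toy values, "dog" and "puppy" score far higher than "dog" and "skyscraper", which is the clustering behavior described above.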

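Step 2: Work in latent space

Here is the clever part. Working directly on high-resolution images is computationally expensive, so modern systems usually run in a compressed "latent space" instead, which makes generation far more efficient.

In the 2022 paper behind Stable Diffusion, Rombach et al. showed that running diffusion in a compressed space preserves image quality while sharply reducing the compute required, which is what made the technology accessible to a broad audience.

Stable Diffusion compresses a 512×512 image (786,432 values) into a 64×64 latent representation with 4 channels, or just 16,384 values. Thanks to this 48-fold compression, it can run on a consumer GPU rather than requiring an expensive data center.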
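
The numbers above can be checked with a few lines of arithmetic. The 3 color channels and the 4 latent channels are assumptions here (they match the Stable Diffusion v1 layout and are the values that make the quoted totals work out).

```python
pixel_values = 512 * 512 * 3      # height x width x RGB channels
latent_values = 64 * 64 * 4       # latent height x width x 4 latent channels (assumed)
compression_ratio = pixel_values / latent_values
downsample_factor = 512 // 64     # each latent cell summarizes an 8x8 patch of pixels

print(pixel_values, latent_values, compression_ratio)  # 786432 16384 48.0
```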

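Generation starts from random noise in latent space. Think of it as a heavily compressed starting canvas filled with static.

Step 3: Iterative denoising

Next comes the core of the process. The system denoises the latent over a series of iterations, typically 20 to 50, using a U-Net, a neural network architecture originally designed for medical image segmentation and named for its U-shaped layout.

At each step, the U-Net receives the following inputs:

- The current noisy latent representation

- The text embedding of the prompt, as encoded by CLIP

- The timestep, which indicates how far along the denoising process is

The network predicts the noise in the latent and removes it. Early steps establish the overall composition and main outlines; later steps refine textures and details.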
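
The shape of that loop can be sketched as follows. This is a toy under loud assumptions, not how any particular product implements sampling: `toy_noise_predictor` is a hypothetical stand-in for the trained U-Net that "cheats" by treating the text embedding as the clean latent, the update rule is a deterministic DDIM-style step, and the schedule values are the linear ones from the DDPM paper.

```python
import math
import random

def alpha_bar_schedule(num_train_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Share of signal remaining at each training timestep (linear betas, assumed)."""
    alpha_bars, running = [], 1.0
    for t in range(num_train_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_train_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def toy_noise_predictor(latent, text_embedding, timestep, alpha_bars):
    """Hypothetical stand-in for the U-Net: same three inputs, but no learning.

    It pretends the text embedding IS the clean latent and solves for the noise.
    """
    a_bar = alpha_bars[timestep]
    return [(x - math.sqrt(a_bar) * target) / math.sqrt(1.0 - a_bar)
            for x, target in zip(latent, text_embedding)]

def denoise(text_embedding, num_inference_steps=20, seed=0):
    alpha_bars = alpha_bar_schedule()
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in text_embedding]   # start from pure noise
    # Visit an evenly spaced subset of timesteps, from noisiest to cleanest.
    stride = len(alpha_bars) // num_inference_steps
    timesteps = list(range(len(alpha_bars) - 1, -1, -stride))
    for i, t in enumerate(timesteps):
        a_bar = alpha_bars[t]
        a_bar_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = toy_noise_predictor(latent, text_embedding, t, alpha_bars)
        # Estimate the clean latent, then move it to the next, lower noise level
        # using the same predicted noise (deterministic DDIM-style update).
        x0 = [(x - math.sqrt(1.0 - a_bar) * e) / math.sqrt(a_bar)
              for x, e in zip(latent, eps)]
        latent = [math.sqrt(a_bar_prev) * c + math.sqrt(1.0 - a_bar_prev) * e
                  for c, e in zip(x0, eps)]
    return latent

result = denoise([0.3, -0.7, 1.2])
```

Because the toy predictor is exact, `result` ends up matching the target values. A real U-Net only approximates the noise, which is why real sampling benefits from multiple steps and why the step count affects quality.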

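Text embeddings steer the process through a mechanism called "cross-attention". At each spatial location, the network attends to the relevant parts of the prompt to decide what should appear there.

Step 4: Decode to pixels

Once denoising is complete, the decoder of a variational autoencoder (VAE) converts the compressed latent representation back to full resolution, reconstructing pixel-level detail in this "upsampling" stage.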

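CFG (guidance scale): the key parameter for prompt adherence

If you have used Stable Diffusion, you have seen "CFG" or "guidance scale", the parameter that controls how closely the model follows your prompt. Most users stick with the default of 7 recommended in tutorials, but understanding how it works gives you finer control over the result.

CFG stands for "classifier-free guidance". At every denoising step, the model actually makes two predictions:

- With your prompt: what the image should look like given the text description.

- Without any prompt: what a "generic" image would look like with no specific guidance.

The final prediction depends on the difference between the two. Raising the CFG value amplifies that difference and pushes the image to match the prompt more closely.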
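
In code, the combination is a single line per value. The sketch below uses the standard classifier-free guidance formula; the function name and the two-element toy predictions are made up for illustration.

```python
def apply_cfg(noise_uncond, noise_cond, guidance_scale):
    """Start at the prompt-free prediction and push toward the prompted one.

    guided = uncond + scale * (cond - uncond)
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]

uncond = [0.0, 0.2]   # toy prediction without the prompt
cond = [1.0, 0.2]     # toy prediction with the prompt
guided = apply_cfg(uncond, cond, 7.5)
print(guided)         # [7.5, 0.2]
```

A scale of 1 returns the prompted prediction unchanged, and a scale of 0 ignores the prompt entirely. Values above 1 exaggerate whatever the prompt changed (the first element here) and leave untouched whatever it did not (the second element).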

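This comes with a trade-off:

- Low CFG (1-5): gives the AI more creative freedom, but the result may drift from the prompt

- Medium CFG (7-12): balances image quality and prompt accuracy; the generally recommended range

- High CFG (15+): follows the prompt strictly, but tends to cause oversaturated colors or visual artifacts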

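Comparing the major tools: DALL-E, Midjourney, and Stable Diffusion

Although today's mainstream image generators are all built on diffusion models, they differ significantly in architecture and implementation.

DALL-E 3 (OpenAI)

OpenAI integrated ChatGPT directly into the generation pipeline: GPT-4 rewrites and expands the prompt before generation, so DALL-E can turn simple instructions into richly detailed images. This lowers the barrier to entry and also made real progress on rendering text inside images, a long-standing weakness of AI image generators. Power users who want precise control may find that it takes too many liberties, but it excels at interpreting complex intent and at rendering text.

Midjourney

Midjourney's models favor aesthetics over literal accuracy, which gives its output a distinctive "painterly" or "cinematic" look. Even when it does not follow every detail of the prompt, the results are often more visually striking than those of other generators. Its Discord-based interface is unusual but has fostered a very active creative community. Midjourney is also less open than its competitors about its underlying technology.

Stable Diffusion

Stable Diffusion is the leading open-source option. It can run locally, giving you full control over how it operates and the freedom to customize it extensively. That openness has produced a thriving ecosystem of fine-tuned models, LoRAs (low-rank adaptations that add specific concepts), and extensions. The learning curve is steeper, but for users who want maximum control, data privacy, or custom model training, it is the best choice.

Adobe Firefly

Firefly is trained entirely on Adobe Stock images, openly licensed content, and public-domain works, which makes it a lower-risk choice for commercial use from a copyright standpoint. It integrates closely with Photoshop and Illustrator, and its design is deliberately conservative: it avoids generating controversial or edgy content.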

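Beyond the basics

Text-to-image is only the starting point. Today's systems support several extensions worth knowing about.

Image-to-image (img2img)

Instead of starting from pure random noise, you can start from an existing image with some noise added. The "denoising strength" parameter controls how much noise is added, and therefore how far the result departs from the original. Low strength produces subtle style changes; high strength allows bold reinterpretation while still retaining the broad composition of the original.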
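
One common convention, assumed here rather than taken from any specific tool's documentation, is to treat strength as the fraction of the denoising schedule that actually runs: the input image is noised to that point, and only the remaining steps are executed. The function name below is hypothetical.

```python
def img2img_schedule(num_inference_steps, strength):
    """Split a schedule into skipped and executed steps for a given strength in [0, 1].

    strength = 1.0 behaves like text-to-image (the input is fully replaced by noise);
    strength = 0.0 runs no denoising steps and leaves the input unchanged.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_to_run = min(int(num_inference_steps * strength), num_inference_steps)
    steps_skipped = num_inference_steps - steps_to_run
    return steps_skipped, steps_to_run

gentle = img2img_schedule(40, 0.25)   # (30, 10): light noise, only the last 10 steps run
heavy = img2img_schedule(40, 0.75)    # (10, 30): heavy noise, most of the schedule runs
```

This also explains a practical side effect: at low strength, fewer steps run, so img2img tends to finish faster than a full text-to-image pass with the same step setting.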

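Inpainting and outpainting

Inpainting regenerates only a masked region, leaving the rest of the image untouched, so you can remove clutter or replace elements. Outpainting extends an image beyond its original borders, generating new content that blends naturally with the existing scene.

ControlNet

ControlNet adds structural guidance to generation: edge maps, depth maps, human pose skeletons, or segmentation masks can pin down the layout of elements. It is a key tool for keeping character designs consistent, and it offers spatial control that prompts alone cannot provide.

LoRA and DreamBooth

Want the AI to generate a specific person, product, or visual style that is not in its training data? With LoRA (Low-Rank Adaptation) or DreamBooth, you can fine-tune a model on a small custom dataset of 20 to 30 images so that it can generate that concept on demand.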

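Current limitations and their causes

Knowing the limitations and common failure modes of AI image generation helps you work around them.

The notorious hands problem

AI generators are well known for getting hands wrong: the wrong number of fingers, fused digits, or distorted anatomy. This is not a simple bug; it reflects a fundamental difficulty in how the models learn.

In the training data, hands appear at widely varying angles and positions and are often partly occluded. They also occupy only a small part of each image, so they receive relatively little weight during training. Compared with more regular features such as faces, the statistical patterns of correct hand anatomy are harder for a model to capture. Recent models have improved, but hands remain a common weak spot.

Text rendering

Before DALL-E 3, generating legible text in an image was nearly impossible: models understood what words mean but could not reliably reproduce the shapes of the letters. DALL-E 3 made significant progress here, but complex text layouts remain unreliable on every platform.

Consistency across generations

Because generation starts from random noise, keeping the same character or scene consistent across multiple generations is difficult. Fixing the seed, using reference images, or training a character LoRA all help, but there is no complete solution yet, which limits applications such as comics with continuity and brand character development.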
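
Why fixing the seed helps can be shown with Python's standard random number generator. This is only an analogy for how a generator draws its starting noise; the function name and the 8-value "latent" are hypothetical, and real tools use their own generators and much larger tensors.

```python
import random

def initial_latent_noise(seed, size=8):
    """Draw the starting noise from a generator seeded with a fixed value."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

first_run = initial_latent_noise(seed=42)
second_run = initial_latent_noise(seed=42)   # identical to first_run
other_seed = initial_latent_noise(seed=43)   # a different starting canvas
```

The same seed always yields the same starting noise, so with an identical prompt and settings the denoising process retraces the same path. Change the prompt even slightly, though, and the path diverges, which is why a fixed seed helps reproducibility but does not by itself guarantee a consistent character.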

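Spatial reasoning

Models handle individual objects well, but they often fail to lay out a scene correctly when given descriptions such as "a red ball to the left of a blue cube, with the cube behind a green pyramid". Positional relationships among multiple elements remain difficult.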

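The copyright question

This is where the legal and ethical considerations get complicated.

Training data

Most AI image models are trained on billions of images scraped from the internet, usually without the explicit permission of the original creators. This has led to multiple lawsuits over whether such training constitutes copyright infringement, and the legal boundaries remain unsettled.

Ownership of generated content

The U.S. Copyright Office holds that copyright protection requires human authorship, so purely AI-generated images generally cannot be copyrighted. A work that involves "sufficient human creative input" may still qualify. Where exactly that line falls is not yet clear, and several related disputes are in active litigation.

Platform terms of service matter too. Most commercial platforms grant users rights to the images they generate, but you should still read the terms carefully for your specific use case.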

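Practical tips for better results

Understanding the underlying technology helps you write better prompts and get better results. Here are the key practical points:

Put the most important concepts first

Text encoders tend to weight words differently depending on where they appear in the prompt. "Sunset, dramatic lighting, mountain landscape" and "a mountain landscape at sunset" will emphasize different things, so put the most important elements at the front of the prompt.

Reference things the model knows

Because models are trained on massive datasets, naming a specific artist, art movement, or piece of photographic equipment (for example, the look of Kodak Portra 400 film) triggers a particular visual pattern more reliably than an abstract description. The term "Rembrandt lighting", for instance, yields far more precise lighting than a vague phrase like "dramatic side lighting".

Iterate instead of expecting perfection on the first try

Good AI images usually take several rounds of refinement. Generate multiple variations, refine the prompt, and pick the best result. With img2img, you can also iterate on specific details while keeping the overall composition stable.

Use negative prompts to control the output

Negative prompts steer generation away from things like blur, distortion, extra fingers, watermarks, and low quality by actively reducing the influence of those features during denoising, which helps avoid common flaws. A well-maintained library of negative prompts is a practical way to get consistently high-quality images.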
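
A common way to implement this, assumed here as typical of Stable Diffusion front ends rather than guaranteed for every tool, is to let the negative prompt's prediction take the place of the prompt-free prediction in the guidance formula. The function name and toy values below are illustrative only.

```python
def guide_with_negative(noise_negative, noise_positive, guidance_scale):
    """Push the prediction toward the positive prompt and away from the negative one.

    guided = negative + scale * (positive - negative)
    """
    return [n + guidance_scale * (p - n)
            for n, p in zip(noise_negative, noise_positive)]

positive = [1.0, 0.0]   # toy prediction for the main prompt
negative = [0.0, 1.0]   # toy prediction for the negative prompt (e.g. "blurry")
guided = guide_with_negative(negative, positive, 2.0)
print(guided)           # [2.0, -1.0]
```

The second element, which only the negative prompt favored, is pushed below zero: the update moves away from whatever the negative prompt describes, not merely toward the main prompt.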

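What's next

AI image generation is evolving quickly. These developments are worth watching:

- With systems such as Sora and Runway Gen-3, diffusion models have been extended to video, and high-quality text-to-video is becoming a reality.

- 3D generation is maturing quickly. Building 3D models from text or images will change workflows in game development, product visualization, and virtual reality (VR).

- Ongoing algorithmic optimization has brought generation to interactive speeds, with some applications responding in under a second.

- New architectures are tackling character and scene consistency, progress that would open up demanding applications such as comics and animation.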

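Frequently asked questions

How long does AI image generation take?

Cloud services typically take 10 to 30 seconds per image. Stable Diffusion running locally on a modern GPU (an RTX 3060 or better) can generate a 512×512 image in 2 to 5 seconds. Generation time increases with higher resolution and more sampling steps.

Do AI image tools just copy existing images?

No. The models do not store copies of images; they learn statistical patterns from massive amounts of data. That said, some very well-known images may be partially "memorized", and prompts that name a specific artist often produce work with clear traces of that artist's style, which is at the center of the fiercest copyright disputes.

Why are hands so hard for AI to get right?

Hands appear in training data at highly variable angles, positions, and degrees of visibility, and they take up little space in full-body compositions, so the model sees too little detail to learn from. Their statistical patterns are more complex than those of more regular features. The problem is improving as the technology advances, but it remains one of the harder challenges in AI image generation.

Can AI-generated images be used commercially?

It depends on the platform and your jurisdiction. Most commercial services explicitly grant commercial usage rights in their terms of service, but in some places, including the United States, purely AI-generated content may not be eligible for copyright protection. Adobe Firefly is designed for commercial use: because its model is trained entirely on licensed content, it offers stronger assurance for commercial work.

What is the core difference between diffusion models and GANs?

GANs pit a generator against a discriminator, whereas diffusion models generate images by learning to reverse a gradual noising process. With more stable training, more diverse output, and better prompt adherence, diffusion models have become the dominant approach in AI image generation.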

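Key takeaways

AI image generation is not magic. At its core is a carefully engineered system built on diffusion models: it combines an understanding of text with visual patterns learned from massive datasets, turning a description into a guided, step-by-step denoising process that converges on an image.

When you enter a prompt, a text encoder trained on hundreds of millions of image-text pairs guides denoising in a compressed latent space, and the result is decoded into a full-resolution image. Even a basic grasp of this pipeline helps you use the tools more effectively.

Generating an image is not making a wish; it is supplying a guidance signal to a rigorous mathematical procedure. The system interprets your words and steers the denoising according to the patterns it has learned.

Understanding these principles does more than satisfy curiosity: it helps you refine prompts, set realistic expectations, and choose the right tool for each job. As the technology keeps changing, this foundation will help you adapt.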